Motivation

In modern software development, Open-Source Software (OSS) stands as a cornerstone, embodying collaboration, innovation, and accessibility.

Defined by its freely available source code, OSS has revolutionized the way software is created, distributed, and maintained. Its significance lies not only in its cost-effectiveness but also in the vibrant communities that rally around these projects, driving continual improvement and evolution. From the smallest startups to industry behemoths, OSS permeates virtually every layer of the tech stack, from web development to data science and DevOps. Popular frameworks such as React, Angular, and Vue.js dominate front-end development, while Node.js powers the server-side logic of countless applications. Mobile app development owes much of its success to frameworks such as React Native and Flutter. Infrastructure tooling, such as Terraform for infrastructure as code and Kubernetes for container orchestration, thrives on the open-source ethos, enabling scalable and resilient architectures.

Yet, amid the widespread adoption of OSS, security remains a paramount concern. Regular security audits and vulnerability scans are imperative to identify and mitigate potential threats lurking within codebases and dependencies. These proactive measures serve as bulwarks against malicious actors and coding errors that could compromise the integrity of applications. Moreover, staying updated on security patches is non-negotiable.

The discovery of vulnerabilities by security researchers or malicious actors is inevitable, but timely patch management can greatly reduce their impact. Often, the fix is as simple as upgrading the affected software component to its latest version. By embracing best practices in automated dependency management, development teams can harness the transformative power of OSS while safeguarding against security risks.

In this article, we will:

- Dive into the essentials and tactics for mastering automated dependency management, with a spotlight on Renovate.

- Unpack the complexity of managing a project that spans over 500 repositories and the challenges it poses for automated dependency management.

- Explore how Renovate empowers teams to create software that is both robust and secure against the backdrop of a rapidly changing tech environment.

The rise of vulnerabilities and why automated dependency management is a clever idea

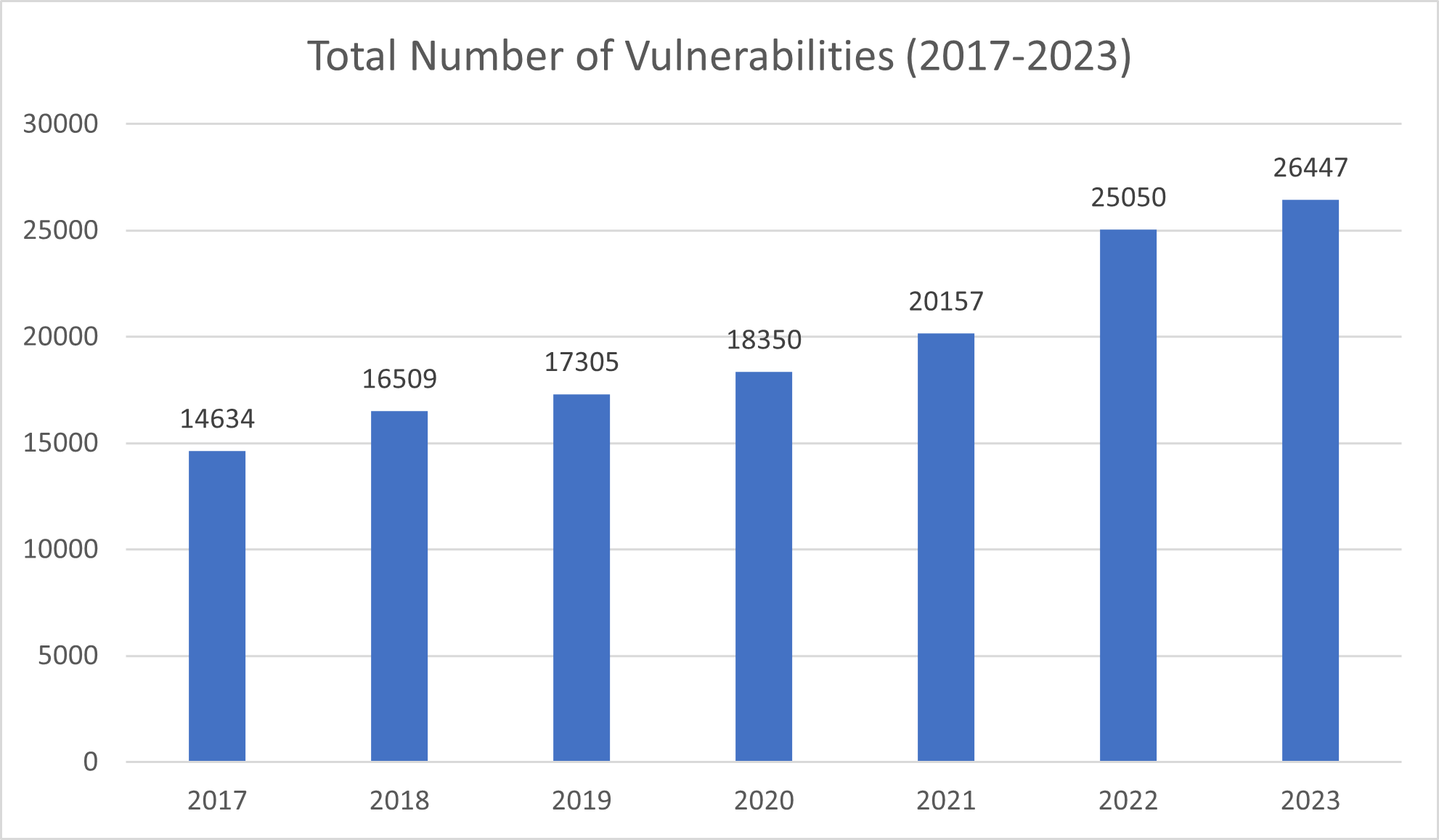

In recent years, the number of software vulnerabilities has risen consistently, as illustrated in the chart. Manual dependency updates struggle to keep pace with this growth, which peaked at over 500 vulnerabilities per week in 2023. At this volume, manual processes are insufficient for ensuring the security and compatibility of software projects. Because developers focus mostly on the functional side of their projects, the tedious task of tracking and updating dependencies manually is often pushed further down the backlog.

Meanwhile, critical vulnerabilities can slip by unnoticed, leaving applications exposed to exploitation. Ensuring compatibility with each updated dependency further complicates manual efforts, leading to delays, errors, and increased risk. These limitations of manual dependency management underline the need for automated solutions that address security concerns efficiently while maintaining compatibility.

By quickly integrating the latest versions of dependencies, developers can stay ahead of potential threats and harden their applications against security risks. Automated dependency management is a systematic and proactive approach to handling the dependencies of a software project automatically. Its primary purpose is to ensure that an application’s dependencies are up to date, secure, and compatible. To be effective and efficient, the process must cover both aspects of dependency management: identification (detecting outdated or vulnerable dependencies) and integration (applying the updates and verifying that the application still works).
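To make the identification step more concrete, here is a minimal Python sketch. It assumes dependencies are pinned to plain numeric MAJOR.MINOR.PATCH versions and that the latest available versions are already known; real tools such as Renovate look these up in package registries themselves. The package names and the find_outdated helper are hypothetical, and pre-release tags are deliberately not handled.

```python
from dataclasses import dataclass


@dataclass
class Dependency:
    name: str
    current: str  # version currently pinned in the manifest


def parse_version(version: str) -> tuple[int, ...]:
    """Convert a plain 'MAJOR.MINOR.PATCH' string into a tuple of ints so that
    versions compare numerically ('1.10.0' > '1.9.0')."""
    return tuple(int(part) for part in version.split("."))


def find_outdated(deps: list[Dependency], latest: dict[str, str]) -> list[tuple[str, str, str]]:
    """Identification step: return (name, current, newest) for every dependency
    whose latest known release is newer than the pinned version."""
    outdated = []
    for dep in deps:
        newest = latest.get(dep.name)
        if newest is not None and parse_version(newest) > parse_version(dep.current):
            outdated.append((dep.name, dep.current, newest))
    return outdated


if __name__ == "__main__":
    # Hypothetical manifest entries and registry data, for illustration only.
    deps = [Dependency("lib-a", "1.9.0"), Dependency("lib-b", "2.4.1")]
    latest = {"lib-a": "1.10.0", "lib-b": "2.4.1"}
    for name, current, newest in find_outdated(deps, latest):
        # The integration step (not shown) would open a pull request with this
        # bump and let the CI pipeline verify compatibility before merging.
        print(f"{name}: {current} -> {newest}")
```

Running it prints lib-a: 1.9.0 -> 1.10.0, while lib-b is skipped because it is already current. Comparing integer tuples rather than strings avoids the classic pitfall where "1.10.0" sorts before "1.9.0".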

Besides shortening the window of exposure to known vulnerabilities, including zero-day exploits once a fix has been published, automated updates also save considerable time and resources, allowing developers to focus on feature work. Streamlining the process and reducing manual effort ultimately leads to higher-quality software products and faster delivery of new features.

Prerequisites

While the benefits of automated dependency management are undeniable, its implementation requires certain prerequisites:

- Continuous integration (CI) pipelines automate building and testing code changes, so that every dependency update is verified as part of the build process.

- A reliable deployment mechanism enables swift, automated deployment of updated applications.

Available tools and why we chose Renovate

Automated dependency management is facilitated by a range of tools designed to streamline the process of updating software dependencies. Snyk and Depfu are among these tools, both offering automatic pull requests to update dependencies in git repositories. Renovate and Dependabot, on the other hand, provide more comprehensive solutions. Both support multiple package managers and allow for customizable configuration, enabling automated pull requests tailored to specific project needs.

Our project’s scope encompasses an extensive setup with seven GitHub organizations and over 500 repositories, including a diverse array of microservices, frontend applications, and infrastructure-as-code (IaC). This setup uses various programming languages, such as Java, JavaScript, TypeScript, Python, Golang and more. The scale and diversity of our project necessitate a highly adaptable and scalable tool for managing our software dependencies efficiently while maintaining a strong security posture.

While Dependabot integrates seamlessly with GitHub, Renovate’s flexibility, customization options, and wider range of supported languages and package managers, including Helm charts, made it the tool of choice for the project whose experience we share here, balancing both usability and security needs.

Integration of a Renovate bot

There are two options for integrating the Renovate bot into GitHub to automate dependency management:

- utilizing the Mend Renovate GitHub application or

- self-hosting a Renovate instance.

The GitHub application is the recommended and most straightforward approach for most projects. However, in certain regulated industries, security policies discourage the use of GitHub secrets, which makes dependency management with the hosted Renovate app incompatible with private package registries such as Artifactory. To cope with this situation, we chose a self-hosted solution: the Renovate Docker image running within a GitHub Actions pipeline, enhanced with additional scripts.

Challenges with self-hosting Renovate

For teams looking to adopt Renovate the way we did in our recent project, several challenges lie ahead. Below, we share the five most interesting ones, along with the solutions that worked for us.

Setting up scheduled Renovate runs

Scheduling GitHub workflow runs is a simple and well-documented process.

```yaml
name: Run Renovate

on:
  schedule:
    - cron: "0 3 * * *" # every day at 3 AM (UTC)
```

Everything works as it is supposed to until the account used to set up the schedule is disabled. From then on, the scheduled workflow no longer starts. This is quite common in companies with many developers: a person leaves, and their GitHub account gets disabled. Unfortunately, this behavior is not as well documented as one would expect. We found a likely root cause and a way to fix it in one of the GitHub Community discussions. In summary, the person who enabled the workflow or last modified the cron expression becomes its “actor” and must have an active GitHub account for the scheduled workflow to start. Using a technical user account and/or moving to a centralized solution with a single schedule configuration, as discussed in the following sections, helps mitigate the impact of such occurrences.

Default GitHub token and its limitations

During our earliest iterations, we used the default token (GITHUB_TOKEN) that GitHub provides when running GitHub Actions. We quickly discovered several limitations of this token.

Most importantly, pull requests created with this token do not trigger any workflows. GitHub enforces this restriction intentionally to prevent accidental recursive workflow runs. However, it eliminates a significant part of the automation an effective dependency management solution requires, namely verifying compatibility.

Many software projects use their CI pipelines to build and test their application automatically, and ours was no exception. Developers were forced either to test the compatibility of each updated dependency manually before merging the changes into the default branch, or to simply merge the proposed changes, close their eyes, and hope for the best. Neither option was acceptable, so we switched to a Personal Access Token (PAT) with a broader permission scope than the default token provides, while still adhering to the principle of least privilege and the organization’s security policies.

Using a PAT can lead to an issue similar to the one with scheduled workflow runs: Personal Access Tokens lose access to repositories when their owner leaves the organization. We overcame this obstacle by creating a technical user, inviting it to all our GitHub organizations, and using its Personal Access Token to run Renovate.

The default GitHub token also lacks the permissions needed to access repositories other than the one running the workflow. Using a PAT removed this limitation as well, which allowed us to extract common baseline configurations into custom configuration presets in a dedicated repository. This simplifies maintenance and makes it easier to manage and update configurations across multiple repositories, since each repository only needs a minimal configuration that extends the shared preset:

```json
{
  "$schema": "https://docs.renovatebot.com/renovate-schema.json",
  "extends": ["local>example-org/central-renovate-configuration"]
}
```

Over 500 repositories across multiple GitHub organizations

Managing a project of this scale, spanning multiple GitHub organizations and more than 500 repositories, presented even more unique challenges. Our first approach was to create a GitHub Actions workflow in each repository. Even after we introduced reusable workflows, this approach proved unsustainable, because onboarding a new repository required too many manual steps from the development teams:

- Create a branch,

- create a new file,

- copy & paste the code,

- commit the changes,

- create a pull request,

- get an approval from another developer,

- merge the pull request,

- trigger the new workflow manually,

- and lastly, approve and merge the onboarding pull request created by Renovate.

Although each step seems simple on its own, repeating them across more than 500 repositories adds up to a significant workload. It also led to inconsistent configurations across the repositories.
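One way to detect this kind of configuration drift is to check whether every repository’s renovate.json extends the shared preset from the configuration example above. The Python sketch below assumes that the file contents have already been fetched (for example through the GitHub API) into a dictionary keyed by repository name. The repository names and both helper functions are hypothetical; config:recommended is one of Renovate’s built-in presets.

```python
import json

# Preset name taken from the example configuration; adjust to your organization.
CENTRAL_PRESET = "local>example-org/central-renovate-configuration"


def extends_central_preset(renovate_json: str, preset: str = CENTRAL_PRESET) -> bool:
    """Return True if the given renovate.json text lists the shared preset in "extends"."""
    config = json.loads(renovate_json)
    return preset in config.get("extends", [])


def find_drifting_repos(configs: dict[str, str]) -> list[str]:
    """Return, sorted, the repositories whose renovate.json does not extend the preset."""
    return sorted(repo for repo, text in configs.items() if not extends_central_preset(text))


if __name__ == "__main__":
    configs = {  # hypothetical repositories and file contents
        "example-org/service-a": json.dumps({"extends": [CENTRAL_PRESET]}),
        "example-org/service-b": json.dumps({"extends": ["config:recommended"]}),
    }
    print(find_drifting_repos(configs))
```

Running it prints ['example-org/service-b'], the only repository that does not inherit the shared baseline.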

Migrating to a centralized solution gave us more control over the configuration, schedule, and onboarding of new repositories, as described in the next section.

Error-Prone, Non-Resilient Pipelines of a Centralized Renovate Instance

With the centralized solution, onboarding a new repository requires a developer to merge just a single pull request. Given the total number of repositories in our project, this is far less work than creating a whole new workflow for each one.

The architecture of our centralized solution is in fact quite simple: a single repository with a single workflow that uses the technical user’s PAT, which has access to every organization and repository. This is possible thanks to Renovate’s auto-discovery feature. When it is enabled, Renovate scans all repositories it can access before proceeding with its primary task of identifying and updating outdated dependencies.
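Renovate performs auto-discovery internally. The Python sketch below only illustrates the idea using the public GitHub REST endpoint GET /orgs/{org}/repos and is not Renovate’s implementation. It assumes a token with read access to the listed organizations and, as a simplification, follows pagination by stopping at the first page shorter than per_page instead of parsing the Link header. The fetch parameter is injectable so the paging logic can run without network access; the helper names and organization names are hypothetical.

```python
import json
import urllib.request
from typing import Callable

API = "https://api.github.com"


def github_get(url: str, token: str) -> list[dict]:
    """Fetch one page of JSON from the GitHub REST API."""
    request = urllib.request.Request(
        url,
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
        },
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def discover_repos(orgs: list[str], fetch: Callable[[str], list[dict]], per_page: int = 100) -> list[str]:
    """List 'org/repo' names for every repository in the given organizations, page by page."""
    found = []
    for org in orgs:
        page = 1
        while True:
            batch = fetch(f"{API}/orgs/{org}/repos?per_page={per_page}&page={page}")
            found.extend(repo["full_name"] for repo in batch)
            if len(batch) < per_page:
                break  # a short (or empty) page means there are no further pages
            page += 1
    return found


if __name__ == "__main__":
    # Offline demo with canned pages; against the real API you would pass
    # lambda url: github_get(url, token) as the fetch function instead.
    canned = {
        f"{API}/orgs/example-org/repos?per_page=2&page=1": [
            {"full_name": "example-org/service-a"},
            {"full_name": "example-org/service-b"},
        ],
        f"{API}/orgs/example-org/repos?per_page=2&page=2": [
            {"full_name": "example-org/frontend"},
        ],
    }
    print(discover_repos(["example-org"], canned.__getitem__, per_page=2))
```

With seven organizations, the loop simply runs once per organization, which mirrors how a single token with access to every organization lets one workflow cover the whole project.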

A high number of repositories complicates things further. In our setup, a single workflow run analyzing all available repositories takes approximately eight hours to finish. That is reasonable for a nightly schedule, but because Renovate processes repositories sequentially, some repositories might not be processed at all if the workflow is stopped or aborted due to errors. Re-running a job that takes eight hours is not feasible, especially since the rerun is exposed to the same risk of failure.

To enable Renovate to run in parallel, we can use the auto-discovery option “writeDiscoveredRepos” (CLI flag --write-discovered-repos). With this option enabled, Renovate does not process any repositories: after the discovery stage, it writes all discovered repositories to a JSON file and then stops.

We then use the generated JSON file as input for a subsequent job that runs in parallel using a matrix strategy, splitting the list of repositories into several batches. For the splitting, we use “_nwise()”, an undocumented built-in function of jq that does exactly what we need. The final step is to tell Renovate which repositories to process, which can be done via the environment variable RENOVATE_REPOSITORIES.
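For readers less familiar with jq, the Python sketch below reproduces what the expression [_nwise(10) | join(",")] in the workflow below does: it splits the discovered repositories into batches of ten and joins each batch into one comma-separated string, which becomes one matrix entry and thus one value of RENOVATE_REPOSITORIES. It assumes repos.json holds a JSON array of "org/repo" strings; the function names are hypothetical.

```python
import json


def chunk_repositories(repos: list[str], size: int = 10) -> list[str]:
    """Split repos into consecutive batches of `size` and join each batch with commas.

    Mirrors jq's [_nwise(size) | join(",")] for a non-empty list; for an empty
    list jq emits [""], whereas this function returns [].
    """
    if size < 1:
        raise ValueError("size must be a positive integer")
    return [",".join(repos[i:i + size]) for i in range(0, len(repos), size)]


def matrix_output(repos_json: str, size: int = 10) -> str:
    """Turn the contents of repos.json into compact one-line JSON (like jq -c),
    suitable as a job output that fromJson() turns back into a matrix list."""
    repos = json.loads(repos_json)
    return json.dumps(chunk_repositories(repos, size), separators=(",", ":"))


if __name__ == "__main__":
    # Hypothetical discovery result with three repositories and batches of two.
    print(matrix_output('["org/a", "org/b", "org/c"]', size=2))
```

Running it prints ["org/a,org/b","org/c"], that is, two matrix entries and therefore two Renovate jobs. When choosing the batch size, keep in mind that GitHub Actions caps a matrix at 256 jobs per workflow run; with ten repositories per batch, a little over 500 repositories yields only about 50 jobs.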

This solves the problem of an unreliable centralized scanning workflow but introduces the risk of exceeding API request limits.

Exceeding API Request Limits with Parallel Execution

With parallel execution, it is quite easy to exceed the API request limits imposed by GitHub. Hence, after testing several values, we restricted the number of parallel matrix jobs to five, which appears to be the sweet spot between performance and staying within GitHub’s API limits. Additionally, disabling the fail-fast option ensures that the remaining batches of repositories continue to be processed after one of them fails.
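The combined effect of max-parallel: 5 and fail-fast: false can be modeled locally. The Python sketch below is not how GitHub Actions schedules matrix jobs; it only mimics the two settings with a thread pool of five workers and a wrapper that records a failing batch instead of cancelling the others. run_batch is a hypothetical callable, for instance a wrapper that invokes renovate with RENOVATE_REPOSITORIES set to one batch.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Optional


def run_batches(
    batches: list[str],
    run_batch: Callable[[str], None],
    max_parallel: int = 5,
) -> dict[str, str]:
    """Run every batch with at most `max_parallel` running at once and return
    {batch: error message} for the batches that failed."""

    def attempt(batch: str) -> tuple[str, Optional[str]]:
        try:
            run_batch(batch)
            return batch, None
        except Exception as exc:  # like fail-fast: false, one failure never stops the rest
            return batch, str(exc)

    # max_workers plays the role of max-parallel: at most that many batches run concurrently.
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        outcomes = list(pool.map(attempt, batches))
    return {batch: error for batch, error in outcomes if error is not None}


if __name__ == "__main__":
    def fake_run(batch: str) -> None:  # hypothetical stand-in for a Renovate run
        if "broken" in batch:
            raise RuntimeError("renovate exited with an error")

    print(run_batches(["org/a,org/b", "org/broken", "org/c"], fake_run))
```

Running it prints {'org/broken': 'renovate exited with an error'}; the other two batches still complete, which is exactly what disabling fail-fast buys in the matrix job.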

Auto-discovery and preparation job

```yaml
prepare_renovate:
  name: Prepare Renovate
  runs-on: ubuntu-latest # container jobs still need a Linux runner
  container: renovate/renovate:37
  outputs:
    repos: ${{ steps.prepare-output.outputs.repos }}
  steps:
    # checkout code, install jq, etc.
    - name: Auto-discover repositories
      env:
        RENOVATE_AUTODISCOVER: true
      # write the discovered repositories to repos.json and stop
      run: renovate --write-discovered-repos="repos.json"
    - name: Prepare output
      id: prepare-output
      run: |
        # split the list into batches of 10 comma-separated repositories
        chunked_array=$(jq -c '[_nwise(10) | join(",")]' repos.json)
        echo "repos=$chunked_array" >> "$GITHUB_OUTPUT"
```

Actual Renovate job using matrix strategy

```yaml
run_renovate:
  name: Run Renovate
  runs-on: ubuntu-latest
  container: renovate/renovate:37
  needs: prepare_renovate
  strategy:
    fail-fast: false # keep processing the other batches if one fails
    max-parallel: 5 # stay within GitHub API rate limits
    matrix:
      repositories: ${{ fromJson(needs.prepare_renovate.outputs.repos) }}
  steps:
    # checkout code, set up env, etc.
    - name: Run Renovate
      env:
        RENOVATE_REPOSITORIES: ${{ matrix.repositories }}
        RENOVATE_AUTODISCOVER: false
      run: renovate
```

Collaboration and Communication

Supporting several teams across GitHub organizations with a high number of repositories brings many stakeholders to the table. It is important to recognize that security is often not a priority or a functional requirement in software development projects, especially for developers or project owners. Therefore, support from higher management and additional effort may be required to prioritize security initiatives when they were not initially planned.

Establishing and enforcing policies for timely security updates is crucial to maintaining the project’s security and stability. By prioritizing regular updates and ensuring compliance with established policies, teams can mitigate the risk of vulnerabilities and maintain a robust security posture. Furthermore, effective communication with the development teams regarding dependency updates is essential. Clear channels of communication enable teams to coordinate efforts, share insights, and address potential issues promptly. Encouraging collaboration between development and security teams facilitates the identification and resolution of potential issues early in the development process, shifting left on security while minimizing the impact on project timelines.

Conclusion

Despite the challenges we encountered, automating dependency management by self-hosting Renovate offers valuable benefits, including improved security and efficiency, which make the effort worthwhile. Success hinges on a solid structure and architecture that fit the organization’s needs, and the approach must remain flexible and aligned with corporate policies.

However, it is crucial to recognize that automated dependency management alone does not serve as a silver bullet for ensuring an application’s security. Complementary security measures and best practices, including regular security audits, penetration testing, and comprehensive employee training, must be diligently considered. By cultivating a culture of collaboration, communication, and continual improvement, developers can significantly augment the security, reliability, and overall quality of their software products.