Continuous Integration and Continuous Deployment (CI/CD), and pipelining in particular, are not new in professional software development. In parts of the ETL world, however, CI/CD remains an elusive topic that is not yet fully understood and rarely applied in full. As a result, integration and deployment are often done manually and are therefore cumbersome, time-consuming and error-prone.

A solution is rarely trivial, because common obstacles stand in the way: the lack of technological maturity of many proprietary low-code platforms or, even worse, a business’s inability or reluctance to implement the required organisational changes. In the following, we outline a possible solution based on a pattern that we implemented in an exemplary project: Continuous Integration and fully automated deployment of ETL software via Gitlab.

The Situation

Let’s assume the project’s architecture consists of several self-contained systems (SCS), each of which sources data from external systems using dedicated ETL software. The project’s agile setup utilises a Gitlab pipeline for iterative deployments, meaning that short release cycles and automated deployment procedures are mandatory for all parts of the application, including the ETL component.

However, like many comparable tools on the market, our ETL tool ships with a proprietary version control system (VCS) that cannot be integrated with other systems and is deeply rooted in many of the tool’s core features. As a result, the deployment process also differs from the CD pipelines one would employ with a VCS like Git. For these reasons, there is often surprisingly little automation of the deployment process in real-world projects. This forces businesses to employ specialised ‘deployment experts’ who handle the process manually, which makes the operation error-prone, slow and expensive.

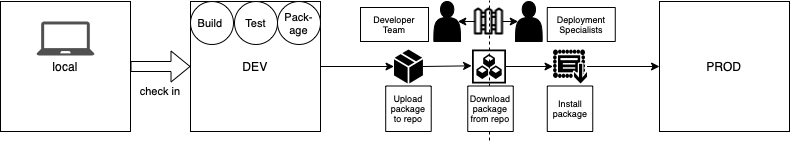

An exemplary deployment process is shown in figure 1. Consider that new code has been developed locally. This code is checked into a repository where the ETL ecosystem is hosted; the check-in is performed semi-automatically (or even completely manually) by the development team. The ETL tool’s VCS is then used to build, test and package the new code. The resulting package is uploaded to a public repository (or a comparable location), from which a deployment specialist can manually install the new code in the production environment. As you can imagine, this process is not fun for anybody, but it has to be endured in order to deploy to production.

Fig.1: Exemplary deployment process within the ETL tool’s ecosystem

A further limitation of the ETL tool’s proprietary nature lies in the visualisation of code changes. The tool uses a graphical development environment, which is in stark contrast to text-based, third-generation programming languages such as Java or Kotlin. Its artefacts are therefore not compatible with the typical line-by-line comparison used in code reviews, which makes the tool even harder to integrate.

What did we do?

To overcome these shortcomings, we decided to roll up our sleeves and build something of our own:

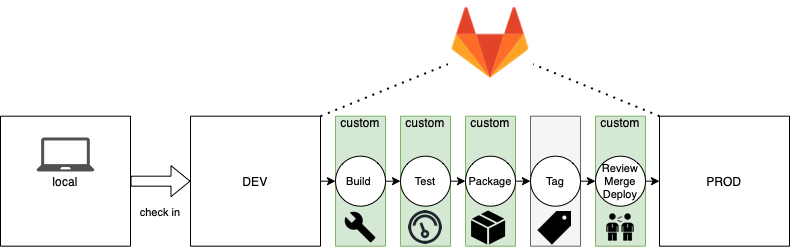

We started by conceptualising the basic lifecycle stages: Build, Test, Package, Tag, Deploy. We then introduced Gitlab to our deployment process and customised the lifecycle stages as shown in figure 2. As described before, changes to the code are made locally and checked into the development environment, where the ETL ecosystem is hosted. At this point, however, we no longer rely solely on the ETL tool’s ecosystem. Instead, we set up the stages such that the ETL VCS is contained within the Gitlab repository.

Fig.2: Schematic of the developed deployment process integrating Gitlab via customised lifecycle management stages

Build stage: For the build stage, we had to implement a custom builder that executes all steps required to build the new application within the ETL tool’s ecosystem. The build process can be triggered and monitored from the Gitlab console and does not require access to the ETL tool itself.

Test stage: The test stage uses a purpose-built testing framework for automated tests, which we spoke about in our last blog entry. In short, it sets up a dedicated test environment including a database for each loading process, runs the process itself and compares the results to expected values that can be defined within the framework. At the end of the test execution, a test report is generated and uploaded into the repository as part of the software release.
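To make the idea more tangible, here is a minimal sketch of such a test in Python. It is not our actual framework: the function `run_load_test`, the stand-in loading process `load_customers` and the table names are all hypothetical, and an in-memory SQLite database takes the place of the dedicated test database.

```python
import sqlite3
from typing import Callable, Iterable


def run_load_test(
    setup_sql: Iterable[str],
    load_process: Callable[[sqlite3.Connection], None],
    result_query: str,
    expected: list[tuple],
) -> dict:
    """Run one loading process against a fresh database and compare the results."""
    conn = sqlite3.connect(":memory:")  # dedicated, throwaway test database
    try:
        for statement in setup_sql:  # create tables and insert test data
            conn.execute(statement)
        load_process(conn)  # run the process under test
        actual = conn.execute(result_query).fetchall()
    finally:
        conn.close()
    # A small report that a later step could write to a file
    return {"passed": actual == expected, "expected": expected, "actual": actual}


def load_customers(conn: sqlite3.Connection) -> None:
    """Hypothetical stand-in for an ETL loading process."""
    conn.execute(
        "INSERT INTO target_customers (id, name) "
        "SELECT id, UPPER(name) FROM source_customers"
    )


report = run_load_test(
    setup_sql=[
        "CREATE TABLE source_customers (id INTEGER, name TEXT)",
        "CREATE TABLE target_customers (id INTEGER, name TEXT)",
        "INSERT INTO source_customers VALUES (1, 'ada'), (2, 'grace')",
    ],
    load_process=load_customers,
    result_query="SELECT id, name FROM target_customers ORDER BY id",
    expected=[(1, "ADA"), (2, "GRACE")],
)
```

Each call gets its own database, so loading processes cannot influence each other's results, and the returned dictionary could serve as the raw material for a test report.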

Packaging stage: As already outlined, our ETL code was previously packaged either manually or semi-automatically by executing a sequence of commands within the tool’s ecosystem. We extracted and generalised the core commands required for packaging the code and provided a dedicated Gitlab stage that executes the resulting script.
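A script of this kind could look roughly like the following Python sketch. The real packaging commands are specific to the ETL tool and are not shown here; the entries in `PACKAGING_STEPS` are placeholders that merely print a message, and the function name `run_packaging_steps` is our own invention for this illustration.

```python
import subprocess
import sys


def run_packaging_steps(steps: list[list[str]]) -> list[str]:
    """Run the packaging commands in order and stop at the first failure."""
    outputs = []
    for command in steps:
        # check=True raises CalledProcessError on a non-zero exit code,
        # which in turn makes the surrounding pipeline job fail.
        result = subprocess.run(command, check=True, capture_output=True, text=True)
        outputs.append(result.stdout.strip())
    return outputs


# Placeholder steps: the actual commands of the ETL tool would go here.
PACKAGING_STEPS = [
    [sys.executable, "-c", "print('export objects')"],
    [sys.executable, "-c", "print('build archive')"],
]

if __name__ == "__main__":
    for line in run_packaging_steps(PACKAGING_STEPS):
        print(line)
```

Passing each command as a list of arguments avoids shell quoting problems, and failing fast ensures that a half-built package never reaches the later stages.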

Tagging stage: While our ETL ecosystem ships with its own tagging capabilities, we added a git tag to our pipeline as well. This tagging strategy enabled us to synchronise the two VCSs at play and allowed us to strictly associate all additionally created files, such as test and change reports, with the new software version.
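One way to keep the two version histories in sync is to derive the git tag from the version label used in the ETL tool. The sketch below assumes exactly that; the `etl-` prefix, the function name `git_tag_commands` and the version format are hypothetical choices for illustration, not a description of our pipeline.

```python
import re


def git_tag_commands(etl_version: str, commit_sha: str) -> list[list[str]]:
    """Build the git commands that mirror an ETL version label as a git tag."""
    # Conservative check only, not a full validation of git reference names.
    if not re.fullmatch(r"[0-9A-Za-z]+(?:[._-][0-9A-Za-z]+)*", etl_version):
        raise ValueError(f"unsupported version label: {etl_version!r}")
    tag = f"etl-{etl_version}"  # hypothetical naming convention
    return [
        # Annotated tag on the commit that holds the package and its reports
        ["git", "tag", "-a", "-m", f"ETL release {etl_version}", tag, commit_sha],
        ["git", "push", "origin", tag],
    ]


if __name__ == "__main__":
    for command in git_tag_commands("2.4.1", "abc123"):
        print(" ".join(command))
```

Because test and change reports are committed before the tag is created, everything reachable from the tagged commit belongs to that one software version.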

Review, Merge, Deploy: Since the Gitlab project now contains deployable packages, we are able to deploy these to any other environment using the same pattern as before: open an ssh connection to the production environment, then build and deploy the package via scripts that we extracted from the well-known deployment process. Of course, organisational hurdles such as compliance and security requirements have to be cleared before production systems can be accessed in this way. One of these requirements is that a code review process has to be established and documented within Gitlab. As mentioned above, the ETL tool does not support line-by-line comparison of code changes. To work around this drawback, we designed a dedicated tool that integrates with the ETL tool’s native VCS and visualises the code changes in a human-readable format. We are now able to perform proper code reviews within Gitlab.
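The core idea behind such a change report can be sketched in a few lines of Python. The sketch assumes that a graphical job can be exported as a mapping from step names to their settings; that export format, the step names and the function `describe_changes` are hypothetical and only serve to illustrate the principle.

```python
def describe_changes(old: dict[str, dict], new: dict[str, dict]) -> list[str]:
    """Compare two exported job definitions and list the changes per step."""
    lines = []
    for step in sorted(old.keys() | new.keys()):
        if step not in old:
            lines.append(f"+ step '{step}' added")
        elif step not in new:
            lines.append(f"- step '{step}' removed")
        else:
            before, after = old[step], new[step]
            # Report every setting whose value differs between the versions
            for key in sorted(before.keys() | after.keys()):
                if before.get(key) != after.get(key):
                    lines.append(
                        f"~ step '{step}': {key} changed "
                        f"from {before.get(key)!r} to {after.get(key)!r}"
                    )
    return lines


# Hypothetical exports of the same job before and after a change
old_job = {
    "read_orders": {"table": "orders"},
    "filter": {"condition": "amount > 0"},
}
new_job = {
    "read_orders": {"table": "orders_v2"},
    "aggregate": {"group_by": "customer_id"},
}

if __name__ == "__main__":
    print("\n".join(describe_changes(old_job, new_job)))
```

A plain-text report like this can be attached to a merge request, which gives reviewers something to comment on even though the underlying artefacts are graphical.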

Ok nice … but is this really worth the effort?

Yes, absolutely! It definitely took a couple of weeks to integrate our ETL tool with the Gitlab ecosystem, and the solution is far from perfect, but the result proves that the resources were well spent: we moved the deployment away from a small circle of specialists and made it accessible and transparent to all team members who have access to Gitlab. This led to broader acceptance of the ETL tool and enabled the teams to synchronise deployments of several software components more easily. Another advantage is that the time it takes to deploy new software to production dropped dramatically, from several hours to a couple of minutes(!), which encourages short release cycles.

In the end, we proved that with some creativity, persistence and understanding of Gitlab, one can solve a problem that was a major design flaw within the industry’s development process, widely accepted as uncomfortable yet unchangeable. If a project’s value were measured by the number of times someone asks “damn, why haven’t we done this earlier?”, this one would certainly be at the top of the list.