Morten Hesebeck-Brinckmann

Senior Consultant

The idea of intelligent machines entices the mind and fuels the imagination. The buzzword "artificial intelligence" appears again and again in media reports, company briefings, and start-up pitch decks. Artificial intelligence is also clearly on the rise in industry. By 2030, an estimated 70 percent of all companies are expected to have adopted at least one of the following AI applications: robotic process automation, virtual assistants, natural language processing, computer vision, or machine learning. This makes it all the more important that companies are able to leverage AI technology. The following article examines how a company can identify a suitable AI business use case and implement it.

Artificial intelligence is a collaborative endeavor with divided responsibilities. The team consists of the data scientist, data engineer, machine learning engineer, software engineer, business analyst, domain expert, and project manager. The project manager is involved in all processes and focuses on coordinating the team. The data scientist prepares the necessary data and develops the machine learning model. The data engineer also prepares data and deploys the machine learning model. The domain expert helps with data preparation as well and otherwise focuses on processes related to the business domain. The machine learning engineer takes care of data preparation and model training, and works on model deployment together with the software engineer and the data scientist. The business analyst is responsible for business understanding. Since the project manager and the business analyst do not necessarily come from an IT background, communication within the team is essential.

Artificial intelligence projects begin with the identification of a suitable use case. A machine learning use case needs to be a solvable problem for which appropriate data is available or can easily be gathered. Repetitive tasks with statistical components have a high chance of being a good AI use case.

Most AI projects use over 100,000 data samples. Data scientists distinguish between structured, semi-structured, and unstructured data. Structured data follows a fixed schema and can be imagined as data in a spreadsheet. Unstructured data, such as movie files, has no predefined structure. Semi-structured data is partially structured, for example e-mails, in which the message content is unstructured but the sender and receivers are structured identifiers. For an AI model to work, the data must contain correlations that the model can learn and use to make predictions.
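To make the e-mail example concrete, the following minimal sketch uses Python's standard `email` module to parse an invented message. The header fields are structured and can be read directly by name, while the body is free text that would need further processing before a model could use it. The message and the `split_email` helper are hypothetical, for illustration only.

```python
from email import message_from_string

# An invented e-mail used purely for illustration.
RAW = """From: alice@example.com
To: bob@example.com, carol@example.com
Subject: Quarterly figures

Hi both, the numbers look better than expected. Let's discuss on Friday.
"""

def split_email(raw: str) -> dict:
    """Separate the structured header fields from the unstructured body."""
    msg = message_from_string(raw)
    return {
        # Structured part: well-defined identifiers that map cleanly to columns.
        "sender": msg["From"],
        "receivers": [addr.strip() for addr in msg["To"].split(",")],
        "subject": msg["Subject"],
        # Unstructured part: free text without a fixed schema.
        "body": msg.get_payload(),
    }

record = split_email(RAW)
print(record["sender"], record["receivers"])
```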

The data management process starts with collecting the data. Data is often spread across different data warehouses, programs, or tables. Companies need a database system that stores their data and makes it centrally accessible, and they often use several database types simultaneously within one database architecture. The core reason for data quality problems is often an inefficient data aggregation process. Inconsistent data formats can often be repaired, but severe inconsistencies and a lack of integrity are more difficult to fix. Data quality problems shrink the data pool because data that cannot be cleaned is removed. Missing values are often replaced with substitute values, a step known as imputation. Once data quality is assured, the data set is split into training and evaluation data. The evaluation data is used to validate the machine learning model after training is finished.
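The cleaning, imputation, and splitting steps can be sketched in plain Python. This is a minimal illustration under assumed conditions: the records and field names are invented, unparseable records are simply dropped, missing incomes are filled with the mean of the known values (one of several possible imputation strategies), and 25 percent of the data is held out for evaluation.

```python
import random
from statistics import mean

# Hypothetical raw records from different sources: one has a value that
# cannot be parsed, one has a missing value (None).
raw_records = [
    {"age": "34", "income": "52000"},
    {"age": "n/a?", "income": "61000"},   # inconsistent, cannot be repaired
    {"age": "29", "income": None},        # missing value, can be imputed
    {"age": "45", "income": "73000"},
    {"age": "38", "income": "58000"},
]

def clean(records):
    """Convert fields to numbers and drop records that cannot be repaired."""
    cleaned = []
    for rec in records:
        try:
            age = int(rec["age"])
        except (TypeError, ValueError):
            continue  # uncleanable record is removed, shrinking the data pool
        income = float(rec["income"]) if rec["income"] is not None else None
        cleaned.append({"age": age, "income": income})
    return cleaned

def impute_mean(records, field):
    """Replace missing values in `field` with the mean of the known values."""
    fill = mean(r[field] for r in records if r[field] is not None)
    return [{**r, field: fill if r[field] is None else r[field]} for r in records]

def train_eval_split(records, eval_fraction=0.25, seed=42):
    """Shuffle reproducibly, then split into training and evaluation sets."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    n_eval = max(1, round(len(shuffled) * eval_fraction))
    return shuffled[n_eval:], shuffled[:n_eval]

data = impute_mean(clean(raw_records), "income")
train, evaluation = train_eval_split(data)
print(len(data), len(train), len(evaluation))
```

Fixing the shuffle seed keeps the split reproducible, so later model comparisons are made on the same evaluation data.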

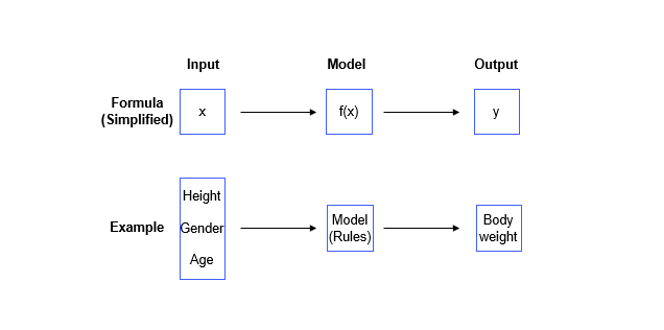

Once the data has been gathered, cleaned, and split, the company can move on to creating the machine learning model. A machine learning model receives input data, processes it through a function, and returns an output value. The choice of programming language, framework, or machine learning software depends on the team's familiarity with them, the budget, and the trade-off between ease of use and performance.

During model creation, the team needs to decide whether to develop the model in the cloud or locally. Machine learning models are usually trained with supervised learning, unsupervised learning, or reinforcement learning. Training typically begins with selecting the model type and class. The model type can be a regression model for numeric values, a classification model for values belonging to a predefined set of classes, or a binary classification model for values that have only two states. Popular model classes include tree-based models, linear models, and neural networks. Decision trees are commonly used for classification tasks and categorize data through a tree-like structure of nodes, where each internal node splits the data on a feature value. Linear models predict values by multiplying each data feature by a weight, summing the results, and adjusting the weights during training for optimal results. Neural networks are loosely modeled on the structure of the human brain and can learn relevant features from the training data themselves.
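The simplest tree-based model is a tree with a single split, often called a decision stump. The sketch below fits one for a binary classification task by trying every observed value as a threshold and keeping the split with the fewest misclassifications. It is an illustration only: real tree learners usually rely on criteria such as Gini impurity or entropy and grow many nodes. The data and function names are invented.

```python
def fit_stump(xs, ys):
    """Fit a one-split decision tree ('stump') for binary labels 0/1.

    Tries every observed value as a threshold and keeps the split with the
    fewest misclassifications. Returns (threshold, label_left, label_right).
    """
    best = None
    for threshold in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        # Each leaf predicts the majority label of its samples (ties go to 1).
        label_left = int(sum(left) * 2 >= len(left)) if left else 0
        label_right = int(sum(right) * 2 >= len(right)) if right else 1
        errors = sum(y != label_left for y in left) + sum(y != label_right for y in right)
        if best is None or errors < best[0]:
            best = (errors, threshold, label_left, label_right)
    _, threshold, label_left, label_right = best
    return threshold, label_left, label_right

def predict_stump(stump, x):
    """Route x to the left or right leaf and return that leaf's label."""
    threshold, label_left, label_right = stump
    return label_left if x <= threshold else label_right

# Invented example: hours of machine usage vs. whether maintenance was needed.
hours = [1, 2, 3, 8, 9, 10]
needs_maintenance = [0, 0, 0, 1, 1, 1]
stump = fit_stump(hours, needs_maintenance)
print(stump, predict_stump(stump, 7))
```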

To evaluate model performance, the project team must assess key performance indicators (KPIs) for machine learning models, such as the area under the ROC curve (AUC), precision, or recall. Each KPI measures a different aspect of how accurately the model predicts.
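Below is a small sketch of how these three KPIs can be computed by hand for a binary classifier. The labels and scores are invented, and the 0.5 threshold that turns scores into predictions is an assumption. AUC is computed here through its probabilistic interpretation: the chance that a randomly chosen positive example receives a higher score than a randomly chosen negative one.

```python
def precision_recall(y_true, y_pred):
    """Precision and recall for binary labels (1 = positive class)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of predicted positives that are right
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of actual positives that are found
    return precision, recall

def roc_auc(y_true, scores):
    """ROC AUC as the probability that a random positive outranks a random negative.

    Ties count as half a win. O(n_pos * n_neg), which is fine for small examples.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

y_true = [1, 0, 1, 1, 0, 0]
scores = [0.9, 0.4, 0.35, 0.8, 0.1, 0.6]
y_pred = [int(s >= 0.5) for s in scores]  # assumed decision threshold of 0.5
print(precision_recall(y_true, y_pred), roc_auc(y_true, scores))
```

Precision and recall depend on the chosen threshold, while AUC summarizes ranking quality across all thresholds, which is why the metrics are usually reported together.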

At the start of model training, the model is provided with the prepared training data. It then learns to recognize patterns in the data using the selected algorithm. The team needs to specify the input source for the data, how many times the model iterates over the data (the number of epochs), and how the order of the data is randomized. Often, multiple models are trained and compared with each other.
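The training parameters mentioned above (number of passes over the data, shuffling between passes) and the comparison of several models can be sketched with a tiny linear model trained by stochastic gradient descent. The data, the candidate learning rates, and the choice to compare models by mean squared error on held-out data are all assumptions made for this illustration.

```python
import random

def train_linear(data, epochs=200, learning_rate=0.01, seed=0):
    """Fit y ~ w * x + b with stochastic gradient descent on squared error.

    `epochs` sets how often the model iterates over the data; the data order
    is reshuffled before every epoch with a fixed seed for reproducibility.
    """
    rng = random.Random(seed)
    samples = list(data)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(samples)
        for x, y in samples:
            error = (w * x + b) - y
            # Gradient of 0.5 * error**2 with respect to w and b.
            w -= learning_rate * error * x
            b -= learning_rate * error
    return w, b

def mse(model, data):
    """Mean squared error of a (w, b) model on a list of (x, y) pairs."""
    w, b = model
    return sum(((w * x + b) - y) ** 2 for x, y in data) / len(data)

# Invented data that follows y = 2x + 1 exactly.
train_data = [(x, 2 * x + 1) for x in [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]]
eval_data = [(x, 2 * x + 1) for x in [0.25, 1.75, 3.0]]

# Train several candidate models and keep the one with the lowest evaluation error.
candidates = {lr: train_linear(train_data, learning_rate=lr) for lr in (0.001, 0.01, 0.05)}
best_lr = min(candidates, key=lambda lr: mse(candidates[lr], eval_data))
print(best_lr, candidates[best_lr])
```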

Simplified ML Model

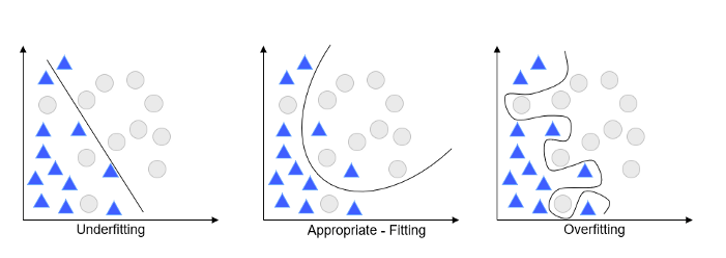

To get the best results out of the training data, the team must perform feature engineering and select the variables in the training data that can influence the output. During training, different machine learning models are compared using various machine learning KPIs. A common problem during training is underfitting. An underfitted model is too simple to detect the trend in the data set. Underfitting can be mitigated by increasing the training time or by increasing model complexity, for example by adding more neurons to a neural network.
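Feature engineering, mentioned at the start of the paragraph, is another way to give a model enough capacity to capture a trend. In the following sketch, a straight line fitted to invented quadratic data underfits: its error stays high even on the training data. Adding the engineered feature x² lets the same simple least-squares model fit the trend. The data and helper names are hypothetical.

```python
def fit_simple_ols(xs, ys):
    """Closed-form least squares for y ~ a + b * x with a single feature."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    b = cov / var
    return mean_y - b * mean_x, b

def training_mse(xs, ys, a, b):
    """Mean squared error of the fitted line on the training data itself."""
    return sum((a + b * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Invented data with a quadratic trend that a straight line cannot capture.
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [x ** 2 for x in xs]

# Underfitted: with the raw feature x alone, the training error stays large.
a, b = fit_simple_ols(xs, ys)
print("x only:", training_mse(xs, ys, a, b))

# The engineered feature x**2 lets the same simple model capture the trend.
squared = [x ** 2 for x in xs]
a2, b2 = fit_simple_ols(squared, ys)
print("with x**2:", training_mse(squared, ys, a2, b2))
```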

Another common problem is overfitting. An overfitted machine learning model cannot generalize because noise and anomalies in the training data influence its output. Overfitting can be reduced by increasing the size of the data set, changing the feature selection, stopping training early, or using ensemble learning.
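Early stopping is one of the remedies listed above. A common rule is to watch the validation loss after every epoch and stop once it has not improved for a set number of epochs (the "patience"), keeping the best weights seen so far. To stay self-contained, this sketch replays an invented list of validation losses instead of running a real training loop; the parameter names are assumptions.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the epoch whose weights should be kept under early stopping.

    Training stops once the validation loss has not improved by more than
    `min_delta` for `patience` consecutive epochs; the best epoch so far is kept.
    """
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break  # no improvement for `patience` epochs: stop training
    return best_epoch

# Invented validation-loss curve: it falls, then rises as the model starts to overfit.
losses = [0.90, 0.62, 0.48, 0.41, 0.39, 0.40, 0.43, 0.47, 0.52]
print(early_stopping_epoch(losses))
```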

Overfitting, Appropriate Fitting and Underfitting

Once these common errors are resolved, the machine learning model enters the validation process, which tests whether the model works on unseen data. Different models are compared with each other during validation, and the process is often repeated multiple times. After validation, model creation is complete, and the company can move on to model deployment.
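One widely used way to repeat validation several times is k-fold cross-validation. This is one common technique, not necessarily the exact procedure the article has in mind. The data is divided into k folds, and each fold serves once as the validation set while the remaining folds are used for training. The sketch below only generates the index splits; the function name is hypothetical.

```python
def k_fold_indices(n_samples, k=5):
    """Yield (train_indices, validation_indices) for k-fold cross-validation.

    Every sample lands in exactly one validation fold, so each round tests the
    model on data it was not trained on. Shuffle the data beforehand if its
    order carries meaning (for example, if it is sorted by date or label).
    """
    # Spread the remainder so fold sizes differ by at most one sample.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, val
        start += size

for train_idx, val_idx in k_fold_indices(10, k=3):
    print(len(train_idx), val_idx)
```

Averaging a KPI over all k rounds gives a more stable estimate of performance on unseen data than a single split does.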

Model integration, also called model deployment, is probably the most challenging part of the machine learning process. Data scientists spend 75% of their time on it, and it is the part of the process where most machine learning models fail.

At this point, the machine learning model is in an isolated state: it works as a stand-alone application but is not yet integrated into the company's IT infrastructure. It needs to be integrated into all business processes that interact with the model. The system configuration required for deployment consists of the data collection pipeline, feature extraction, data verification, machine learning code, machine resource management, analysis tools, process management tools, the serving infrastructure, and the monitoring infrastructure.
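The data verification component can be as simple as checking every incoming record against the schema the model was trained on before the record reaches the model. The following is a minimal sketch; the schema, field names, and allowed ranges are hypothetical.

```python
# Hypothetical input schema: field name -> (expected type, allowed range).
SCHEMA = {
    "age": (int, (0, 120)),
    "income": (float, (0.0, 10_000_000.0)),
}

def verify_record(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record may be scored."""
    problems = []
    for field, (expected_type, (low, high)) in SCHEMA.items():
        if field not in record:
            problems.append(f"missing field: {field}")
            continue
        value = record[field]
        if not isinstance(value, expected_type):
            problems.append(f"{field}: expected {expected_type.__name__}")
        elif not low <= value <= high:
            problems.append(f"{field}: {value} outside [{low}, {high}]")
    # Fields the model was never trained on usually indicate an upstream change.
    unexpected = set(record) - set(SCHEMA)
    if unexpected:
        problems.append(f"unexpected fields: {sorted(unexpected)}")
    return problems

print(verify_record({"age": 34, "income": 52000.0}))
print(verify_record({"age": 150, "salary": 1.0}))
```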

The data pipeline needs to be connected to the machine learning model via an API, and vice versa. All other applications involved in the deployment process also need to be connected to the model via APIs. The machine learning model is often written in a different programming language than the rest of the IT infrastructure. This problem is usually mitigated through containerization: the model is bundled together with all configuration files, libraries, and dependencies so that it can run on any infrastructure.
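Here is a minimal sketch of such an API, written with only Python's standard `http.server` module. Other applications, such as the data pipeline, send JSON to a `/predict` endpoint and receive the prediction back as JSON. The endpoint path, the JSON shape, and the placeholder weights are assumptions; a production deployment would typically use a dedicated serving framework and run inside a container.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

def predict(features: list[float]) -> float:
    """Placeholder model: a fixed linear function standing in for a trained model."""
    weights, bias = [0.5, -0.2], 1.0  # hypothetical learned parameters
    return sum(w * x for w, x in zip(weights, features)) + bias

def handle_predict(body: bytes) -> bytes:
    """Decode a JSON request, run the model, and encode a JSON response."""
    payload = json.loads(body)
    return json.dumps({"prediction": predict(payload["features"])}).encode()

class PredictionHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            response = handle_predict(self.rfile.read(length))
        except (ValueError, KeyError, TypeError):
            self.send_error(400, 'expected JSON like {"features": [1.0, 2.0]}')
            return
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(response)))
        self.end_headers()
        self.wfile.write(response)

def serve(port: int = 8000) -> None:
    """Block and serve predictions on http://127.0.0.1:<port>/predict."""
    ThreadingHTTPServer(("127.0.0.1", port), PredictionHandler).serve_forever()
```

Calling `serve()` starts the server; a client then POSTs a body such as `{"features": [2.0, 5.0]}` to `/predict`. Keeping `handle_predict` separate from the HTTP handler lets the model logic be tested without a running server.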

Machine learning models that make batch predictions and analyze data at regular intervals can be deployed differently from models that make streaming predictions and are fed near real-time data. Batch prediction models can be fed through an offline data pipeline, while streaming prediction models need to be deployed entirely online. Companies must also decide whether to operate their model locally or in a cloud environment, taking scalability, security, data requirements, and costs into account.
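The difference between the two prediction modes can be sketched with a placeholder model. A batch job scores a complete, already collected data set at once, while a streaming consumer scores each record as it arrives. Here a Python generator stands in for a message queue or socket; the model and the record format are hypothetical.

```python
from typing import Iterable, Iterator

def model(record: dict) -> float:
    """Hypothetical stand-in for a trained model."""
    return 0.1 * record["value"]

def score_batch(records: list[dict]) -> list[float]:
    """Batch prediction: score a complete data set at once, e.g. in a nightly job."""
    return [model(r) for r in records]

def score_stream(events: Iterable[dict]) -> Iterator[float]:
    """Streaming prediction: score each record as soon as it arrives."""
    for event in events:
        yield model(event)

nightly = [{"value": 10}, {"value": 20}, {"value": 30}]
print(score_batch(nightly))

def incoming_events():
    # Stands in for a message queue or socket delivering near real-time data.
    yield {"value": 5}
    yield {"value": 7}

for prediction in score_stream(incoming_events()):
    print(prediction)
```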

An essential part of continuous model deployment is maintaining the model framework and its dependencies through updates. Software architecture problems can occur if updated frameworks and their dependencies no longer match. Support for older machine learning models, frameworks, and libraries should be ensured where possible. Because of the CACE principle (Changing Anything Changes Everything), under which a change to any input, feature, or setting can alter the behavior of the whole model, processes need to be in place to change the machine learning model on demand. Model deployment is a continuous process that never ends as long as the model is in use.
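One small safeguard against mismatched dependencies is to compare, when the service starts, the installed package versions with the versions the model was trained and validated with. The sketch below relies on the standard library's `importlib.metadata.version`. The pinned packages and version numbers are hypothetical examples, and the `lookup` parameter exists only so the check can be exercised without those packages installed.

```python
from importlib.metadata import PackageNotFoundError, version

# Hypothetical pins: the versions the model was trained and validated with.
PINNED = {"numpy": "1.26.4", "scikit-learn": "1.4.2"}

def check_pins(pinned: dict[str, str], lookup=version) -> list[str]:
    """Compare installed package versions with the pinned ones.

    Returns human-readable mismatches; an empty list means the environment
    matches the one the model was validated against.
    """
    mismatches = []
    for package, expected in pinned.items():
        try:
            installed = lookup(package)
        except PackageNotFoundError:
            mismatches.append(f"{package}: not installed (expected {expected})")
            continue
        if installed != expected:
            mismatches.append(f"{package}: installed {installed}, expected {expected}")
    return mismatches

for problem in check_pins(PINNED):
    print("WARNING:", problem)
```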

The difficulty of the deployment process lies in integrating the machine learning model into the company's IT processes. The data pipeline, the data validation system, the machine learning code, and the output all rest on different frameworks and languages, which often do not work together seamlessly. Containerization and cloud computing help but cannot always address the complexity and individuality of use cases, because the model is integrated into an often pre-existing structure with legacy code and dependencies that were not developed with machine learning in mind.

Overall, implementing artificial intelligence use cases is an intertwined process in which the individual components of the roadmap must not be viewed in isolation, but in light of the influence they have on each other. It is difficult for companies to implement cost-effective and efficient solutions. However, if companies understand each part of the roadmap as part of a larger whole and consider all the steps in advance, they can significantly increase their chances of success. The key to success is a well-coordinated, well-composed team and an AI-forward strategy that treats artificial intelligence as a continually evolving part of the company's operational infrastructure.