Why Postgres Materialized Views Became Our Go-To Solution for Data Performance Issues

Efficient data processing is essential in today’s software landscape, and our Full Enterprise Operations Platform (FEOP) project exemplifies this need. Our application allows consulting firms to manage their business processes, including employee management, project planning and staffing procedures. It thus requires us to aggregate large datasets which poses significant performance challenges when done in real time. So, we looked for a solution that would significantly improve performance in the context of our tech stack: Next.js, Spring Boot, PostgreSQL, and Redis, which are all orchestrated on a Kubernetes cluster. While we were looking for a solution that involved Redis caching at first, we quickly started to shift our focus towards a more promising way of aggregating and maintaining data using materialized views.

In the following chapters, we share our insights and experiences of why we started to use materialized views, how we use them, the challenges we encountered, and why it is our preferred method for aggregating data in the FEOP project in more detail.

The Why

FEOP requires deriving data from other data that can change regularly. Initially, we took a straightforward approach: aggregate the necessary data on-the-fly with each request. While this method ensured that the data was always current, it came with a significant drawback — performance. As the amount of data to process grew, the performance got noticeably worse, leading to slow response times and bad user experience.

To mitigate the performance issues, we considered using Redis, a fast in-memory key-value store, to cache the derived data. This approach could have helped with our performance issues but would have introduced a new set of problems. With Redis, we’re able to cache the whole data structure, but selecting just a specific subset is cumbersome and slow. Given that, correctly maintaining the cache would also have been rather complicated—consequently we’ve decided to not follow this idea further.

We also considered manually maintaining the derived data: this would still allow us to quickly query it. While seeming promising initially, it soon became clear that maintaining the data ourselves came with its own set of challenges. Race conditions between different replicas (multiple instances of the application run in parallel to avoid downtime if one instance crashes) can easily occur, that would lead to inconsistencies. Keeping the derived data in sync with the source data would involve considerable development and maintenance efforts, so we’ve decided to not go down this path either.

The need for a fast, stable, and maintainable solution led us to consider materialized views in Postgres. Materialized views provide a way to automatically derive data when the source data changes, effectively eliminating the risk of race conditions and reducing the complexity of keeping the data in sync. With materialized views, we could query the derived data and benefit from the performance improvements of caching without the downsides of manual maintenance. Additionally, some team members had previously worked with materialized views and had positive experiences using them. Thus, we cautiously decided to explore this option further.

The How

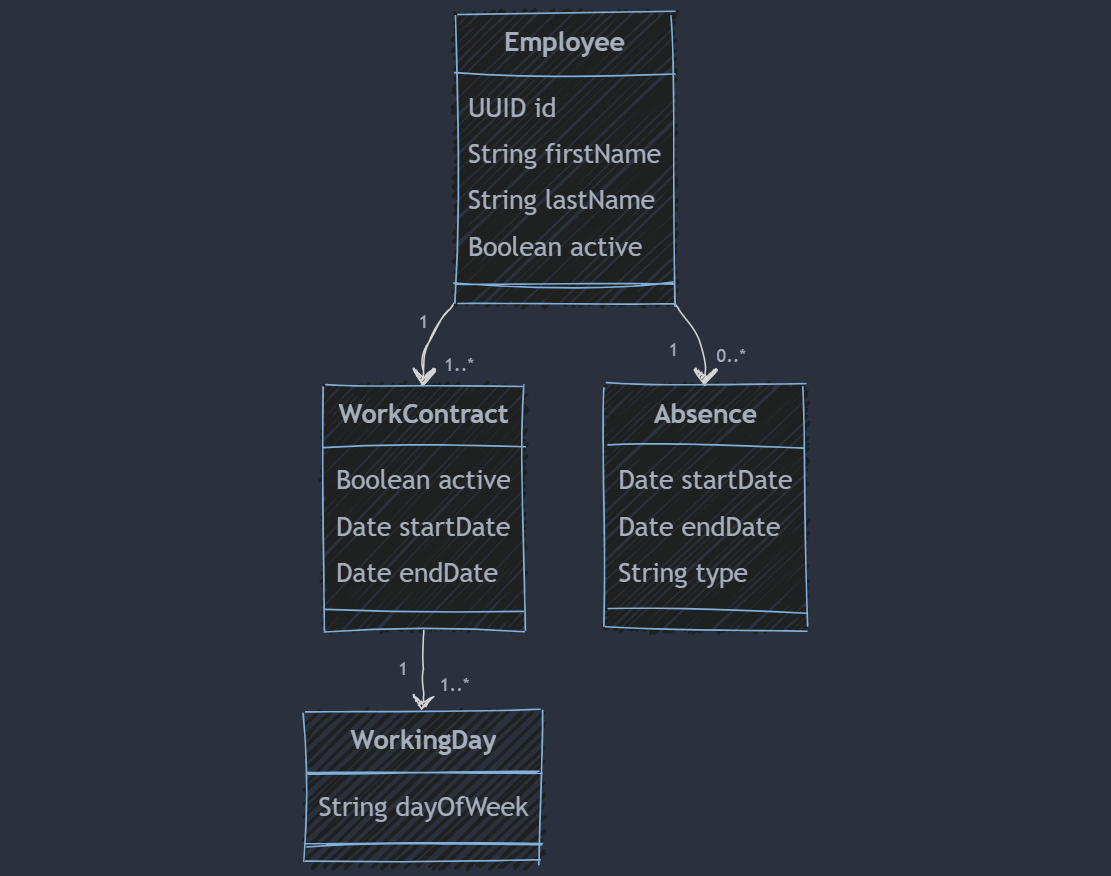

Our application requires us to work with absences that indicate the exact days employees are unavailable. External systems provide various absences as a range that consists of a start date and an end date. These absences are imported as-is. Depending on other factors, like the contractual working days of an employee and the general working days of a company in a country, we need to determine which days an employee is actually available.

Figure 1: General employee data model

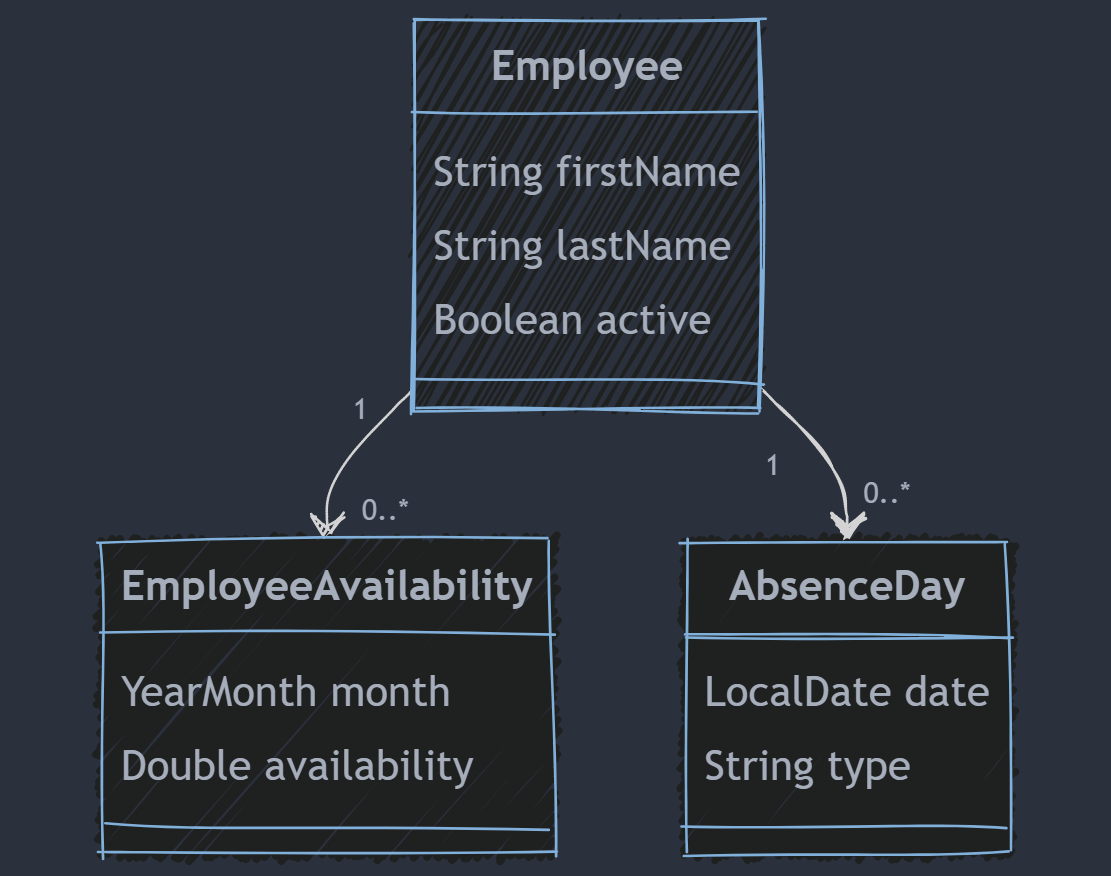

Figure 2: Data model for employee availabilities

The absence days are generated based on the absence range for each active employee and their associated work contracts, and their availability within a month is generated based on the following formula:

EmployeeAvailability = (WorkingDays - AbsencesDuringWorkingDays) / WorkingDays * 100

Here, a working day is specified via the employee’s contract.

Consider the following scenario: An employee that works 5 days a week from Monday to Friday. That would mean July 2024 has 23 working days. Using the previous formula, we would receive the following employee availability: (23 - 0) / 23 * 100 = 100%. Considering the previous parameters, the employee is fully available in July 2024.

Let’s consider the following scenario in which the employee decides to take each Friday off. In that case, they are unavailable for 4 days in July 2024. This would result in the following availability: (23 - 4) / 23 * 100 = 82.61%. This means the employee is unavailable for 17.39% of the month.

Materialized View – Absence day

CREATE MATERIALIZED VIEW absence_day

AS

SELECT

e.id,

a.type,

a.half_day,

ds.date::date

FROM absence a

JOIN employee e ON a.employee_id = e.id

JOIN work_contract wc ON wc.employee_id = e.idMaterialized View – Employee Availability

CREATE MATERIALIZED VIEW employee_availability AS

WITH work_contract_months AS (

SELECT

wc.id AS work_contract_id,

wc.employee_id,

generate_series(

date_trunc('month', wc.start_date),

date_trunc('month', COALESCE(wc.end_date, CURRENT_DATE)),

'1 month'::interval

) AS month_start

FROM work_contract wc

WHERE wc.active

),

work_contract_working_days_month AS (

SELECT

wcm.employee_id,

TO_CHAR(wcm.month_start, 'YYYY-MM') AS month,

COUNT(*) FILTER (WHERE TO_CHAR(calendar_day, 'FMDay') = wd.day_of_week) AS working_days

FROM work_contract_months wcm

JOIN work_contract wc ON wcm.work_contract_id = wc.id

JOIN working_day wd ON wc.id = wd.work_contract_id

CROSS JOIN LATERAL generate_series(

wcm.month_start,

wcm.month_start + interval '1 month' - interval '1 day',

interval '1 day'

) AS calendar_day

WHERE

calendar_day >= wc.start_date

AND (wc.end_date IS NULL OR calendar_day <= wc.end_date)

AND wc.active

GROUP BY

wcm.employee_id,

wcm.month_start

),

absence_days_count AS (

SELECT

ad.employee_id,

TO_CHAR(ad.absence_date, 'YYYY-MM') AS month,

COUNT(*) AS total_absence_days

FROM absence_day ad

GROUP BY

ad.employee_id,

TO_CHAR(ad.absence_date, 'YYYY-MM')

)

SELECT

e.id AS employee_id,

wd.month,

wd.working_days AS total_working_days,

COALESCE(ad.total_absence_days, 0) AS total_absence_days,

ROUND((COALESCE(wd.working_days, 0) - COALESCE(ad.total_absence_days, 0))::NUMERIC / COALESCE(wd.working_days, 1) * 100, 2) AS availability_percentage

FROM employee e

LEFT JOIN work_contract_working_days_month wd ON e.id = wd.employee_id

LEFT JOIN absence_days_count ad ON wd.employee_id = ad.employee_id AND wd.month = ad.month

WITH DATA;The problems

Keeping Data Up-to-date

The main advantage of materialized views also is their biggest downside: its data needs to be kept up-to-date at all times. So, INSERT and UPDATE statements on any source table must trigger the refresh of any related materialized view – causing these statements to become drastically slower. In Postgres, all trigger statements are executed synchronously by default. As a result, inserting a single row can refresh the complete view, resulting in latencies of – in our case – multiple seconds per statement.

Simple views will refresh significantly faster; however, our views are a complex combination of joins and generated sequences, derived from different data sources. Postgres is not able to make incremental updates to these views, but only allows to fully rebuild the whole dataset. This limitation stopped us from using more materialized views in several other occurrences.

We also tried asynchronously refreshing the materialized views, to mitigate this problem. However, this completely broke our end-to-end tests. For example, one test created absences to verify that they are correctly shown in a calendar. If the conversion between absences and days happens asynchronously, then this test would fail as the updated data may not be available yet.

The problem of slow statements became particularly acute in our specific environment. We do a lot of bulk data imports using single-item GraphQL mutations. Then each of these individual requests led to an unbatched insert statement. In an early prototype of our implementation, this caused one import of data to run for 14 hours each night. Offering bulk mutations could have slightly improved the performance, but the amount of extra work drove us to look for other solutions.

Fighting the Race Conditions

Materialized views composed of multiple tables must also have different refresh triggers. However, this can easily lead to deadlocks and concurrency issues. Multiple concurrent REFRESH statements each query the data of the input tables at the time of the refresh, and then update the view using that. However, this causes the different calls to overwrite each other’s results. In our integration tests, this led to occasional, non-deterministic test failures which were a challenge to debug.

Trying to solve this with more restrictive locking was also not an option: Locking one of the input tables during the refresh causes deadlocks if multiple concurrent insert or update statements are performed for the given table. Transaction A might be first to insert data, and then triggers the refresh. However, between it inserting data and triggering the refresh, transaction B might have already acquired a lock for the table to update certain data. In that scenario, both transactions are waiting for each other, causing a deadlock.

Moreover, having interdependent materialized views further aggravates the situation. In our case, the employee availability materialized view was dependent on the absence days materialized view – so changes to one must be reflected in the other. If each view uses a different lock, this can lead to the same kind of deadlock situations encountered above. Therefore, locks need to be carefully chosen.

The Solutions

Manually Triggering Refreshes

After fighting with the performance issues caused by synchronous REFRESH statement for a while, we ultimately gave up and stopped using database triggers to refresh our views. Instead, we used asynchronous, event-based refreshes that are triggered from our Spring Boot application.

In our Redis cache, we started keeping track of which of our materialized views are stale whenever one of the source entities for these tables was updated. Then, after a given interval, we check whether the cache is stale and if yes, update the materialized view. Thanks to the advisory locks (see next section), these actions happen without preventing other transactions from reading data, so our application performance could benefit from the performance gains of our materialized views. For our end-to-end stage, where fresh data is more important than performance, we simply deactivated this scheduling behavior and always synchronously updated the views.

This update mechanism, however, also does not incrementally update the materialized views. We explored this option, but determining whether a change to a given entity would result in an update of a materialized view was more complex than any gain we could receive from that.

Explicit Locking

To avoid the deadlocking issues, we ultimately resorted to explicit locking via advisory locks. Compared to the locks that Postgres can automatically enforce on tables or rows, advisory locks are exclusively user-defined. They allow more elaborate locking mechanisms, just like we needed in our scenario. We specifically used the pg_advisory_xact_lock over the regular advisory_lock as it has the advantage of automatically releasing after a transaction is done.

Using these, we could create one shared lock for each materialized view: Every view receives a custom, unique integer id that is used as the parameter of pg_advisory_xact_lock in the SELECT statement. Consequently, no two REFRESH calls to the same Materialized view can happen at the same time, as the lock prevents other transactions from even querying the input tables. At the same time, our materialized view and all its source tables could still be read from, thereby avoiding any performance degradation in our system.

As deadlock issues are notoriously difficult to debug, we took a few iterations until we ultimately landed on this approach, and it has been working flawlessly since then.

The Conclusion

Implementing materialized views in FEOP was not without its challenges. The initial setup required significant effort, from debugging complex deadlock issues to finding optimal solutions for maintaining both data consistency and performance. However, our investment in overcoming these hurdles has paid off.

The result is a highly reliable and low-maintenance system that now runs smoothly, with materialized views consistently delivering the performance improvements we envisioned. The complexity might seem daunting at first, particularly for those wary of incorporating advanced logic directly into the database. However, as our experience has shown, these fears are largely unfounded. The robustness and efficiency gains far outweigh the initial learning curve and setup complexities.

In the end, we’ve developed a system that not only meets our current performance needs but is also well-equipped to handle future growth. Our journey has reaffirmed the value of materialized views in the right context and has enhanced our team’s confidence in leveraging the database for more than just basic data retrieval. Our materialized views are not just a solution – they are a cornerstone of our system’s architecture, proving that, with the right approach, SQL can be a powerful tool for tackling complex data challenges.