Our authors Pascal and Bastian investigated the Google Software Delivery Shield, which is Google’s fully managed solution for software supply chain security. In this article, they describe how software supply chain security can be achieved during the different steps of the software development lifecycle with the Google Software Delivery Shield.

Introduction to Software Supply Chain Security

At the end of 2021, Log4Shell, a critical vulnerability in Log4j, was disclosed. Log4j is an open-source logging utility used in many projects across different fields. The economic damage caused by that bug was enormous and will keep growing over the next years. This is because not all software maintainers are aware of the affected Log4j versions used in their software, or do not even know that the library is used in some parts of it. The latter is one of the main reasons why the focus on software supply chain security has increased in recent years.

In the following sections, we will dig deeper into this topic:

- What is software supply chain security?

- How could it have helped in a scenario such as the Log4j example?

Along the way, we present a possible lightweight solution to prevent the outlined scenario in the future: Google Software Delivery Shield.

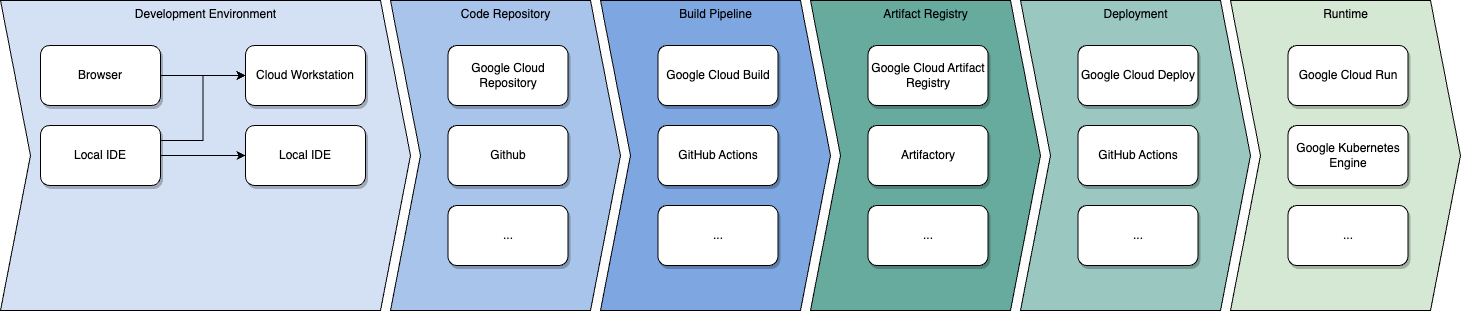

Bringing a vision to production via code requires decisions in six categories, as depicted in the diagram.

- Developers need an environment that is balanced between efficiency and security. Access to code must be protected by multi-factor authentication, and authorization mechanisms must be fine-grained to allow for least privilege.

- Created code needs to be stored in a code repository that supports collaboration and implements mechanisms to protect your different environments against unwanted changes as well as mechanisms that protect against both unauthenticated and unauthorized access to deployment capabilities.

- Products need to be built and/or packaged by a build pipeline such that they can run in the desired runtime further down the road. Security scanning mechanisms provide insight into the attack surface of builds and warn the developers through prompts or failures according to corporate policies. Successful checks are persisted securely for later verification.

- Artifacts created by the build pipeline must be stored in an artifact registry that the runtime environment can access.

- A deployment mechanism must deploy designated artifacts regularly to different environments. Deployments are checked against a set of policies, demanding evidence of authenticity that has been created during the build.

- A runtime environment for artifacts to run in must be set up. Integrity and authentication mechanisms make sure that only artifacts coming from trusted build pipelines whose attack surface lies within the corporate risk appetite can be run. During the lifetime of artifacts, runtime scans detect vulnerabilities within already deployed artifacts and notify owners, who can decide how to cope with the findings based on severity and impact.

The article will go through each step, present Google’s managed end-to-end solution and share experiences gained during the evaluation in March 2023. The latter date is important, as the Software Delivery Shield was still in preview at that time, with ongoing development.

Supply Chain Levels for Software Artifacts

To classify how secure a supply chain is, the Supply Chain Levels for Software Artifacts (abbrev.: SLSA) have been introduced. The SLSA specification v1.0 defines four software build levels, which we explain in the following.

Build Level 0: No guarantees

No security concept for the software supply chain exists.

Build Level 1: Provenance exists

A build provenance is a document describing which sources an artifact was built from, which build tool was used with which parameters, who triggered and approved the build pipeline and even more information depending on the platform. The goal is to provide traceable data about software artifacts. Producers of the artifacts can, for example, see the source commit SHA, reproduce the exact same builds by applying the same build tool input parameters documented in the provenance and do much more with it. Consumers, on the other hand, can verify that the software originates from trusted sources. However, it should be highlighted that in SLSA build level 1 the provenance is not necessarily signed, i.e., the document can be forged.
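To make the idea of a provenance more tangible, the following minimal Python sketch builds a simplified, unsigned provenance document and checks whether a given artifact is the one it describes. The top-level field names follow our understanding of the in-toto statement layout used by SLSA v1.0 provenance; the keys inside `externalParameters`, the helper names and all values are hypothetical and only serve as an illustration. Since nothing is signed here, this corresponds to build level 1 at most.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the hex-encoded SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def build_provenance(artifact_name: str, artifact: bytes, source_uri: str,
                     commit: str, builder_id: str) -> dict:
    """Create a simplified, unsigned provenance document (illustrative only)."""
    return {
        "_type": "https://in-toto.io/Statement/v1",
        "subject": [
            {"name": artifact_name, "digest": {"sha256": sha256_hex(artifact)}}
        ],
        "predicateType": "https://slsa.dev/provenance/v1",
        "predicate": {
            "buildDefinition": {
                # Hypothetical keys: the real content depends on the build type.
                "externalParameters": {"source": source_uri, "commit": commit},
            },
            "runDetails": {"builder": {"id": builder_id}},
        },
    }


def describes_artifact(provenance: dict, artifact: bytes) -> bool:
    """Check whether the artifact's digest is listed as a provenance subject."""
    digest = sha256_hex(artifact)
    return any(
        subject["digest"].get("sha256") == digest
        for subject in provenance["subject"]
    )


# Hypothetical example values.
provenance = build_provenance(
    "python-application.tar", b"artifact bytes",
    "git+https://example.com/org/repo", "0123abcd", "https://example.com/builder",
)
print(describes_artifact(provenance, b"artifact bytes"))    # True
print(describes_artifact(provenance, b"tampered artifact"))  # False
```

A consumer can only rely on such a check if the document itself is trustworthy, which is what the signature requirement of the next level addresses.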

Build Level 2: Hosted build platform

On level 2, the provenance needs to be generated and signed by the build platform itself. By letting the build platform generate and sign the provenance it can be ensured that no hidden actions have been taken during the build process. Furthermore, one can ensure that only trusted build platforms which potentially fulfill certain security criteria have been involved in the software lifecycle. Lastly, the signature can be verified to detect forgery of the document. Hence, producers and consumers can be confident about the trustworthiness and integrity of the software artifact’s origination.

Build Level 3: Hardened builds

Level 3 is very vaguely specified. In short, it requires the build platform to fulfill high security standards where compromising credentials, manipulating artifacts and other attack scenarios are quite unlikely.

Remarks

While achieving SLSA build level 2 requires only low effort, which will be shown in this article, getting to the highest level is cumbersome and non-trivial. It is also a moving target, because security strategies are currently evolving quickly. This is why level 3 is only vaguely specified.

Software Bill of Materials (SBOM)

In the automotive sector, it is obligatory to have a bill of materials for each car model that is produced. Every single component, no matter how small (for example, each screw and nut), is listed in the bill of materials along with its exact specification and technical documentation. Why is this done? Imagine a car accident happens and the subsequent root cause investigation shows that one component is defective. The defective component can be looked up in the bill of materials, its batch number can be checked, and all customers who have that component built into their cars can be warned. This way, further accidents caused by that component can be prevented. Similarly, to harden the software supply chain, it is encouraged to create a so-called software bill of materials (abbrev.: SBOM). It is a document containing a list of all software artifacts and their respective provenances. When a vulnerability is detected, the affected software artifact can be marked as vulnerable and consumers using that artifact can be automatically notified about the security issue. With this strategy, scenarios like Log4j, where companies were still being hacked months after the vulnerability had been detected, could be prevented.
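The lookup described above can be sketched in a few lines of Python. The inventory, the product names and the function name are hypothetical, and a real SBOM (for example in SPDX or CycloneDX format) carries far more detail. The sketch only shows the core idea: once every product lists its components, finding the affected products becomes a simple query.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """One entry of a (heavily simplified) software bill of materials."""
    name: str
    version: str


# Hypothetical inventory: product name -> components listed in its SBOM.
SBOMS = {
    "billing-service": [Component("log4j-core", "2.14.1"), Component("guava", "31.1")],
    "report-generator": [Component("log4j-core", "2.17.1")],
    "frontend": [Component("requests", "2.28.2")],
}


def affected_products(sboms: dict, name: str, vulnerable_versions: set) -> list:
    """Return all products whose SBOM lists a vulnerable version of a component."""
    return sorted(
        product
        for product, components in sboms.items()
        if any(c.name == name and c.version in vulnerable_versions for c in components)
    )


print(affected_products(SBOMS, "log4j-core", {"2.14.1"}))  # ['billing-service']
```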

Google Software Delivery Shield In-Depth

After having considered the two concepts of SLSA and SBOM, let us look deeper into Google’s fully managed solution for software supply chain security – the Software Delivery Shield. This will be done by walking through each step within the software development lifecycle and describing what Google offers us to ensure security and trustworthiness in the respective step.

Assured Open-Source Software (AOSS)

Nowadays, most software makes use of open-source products. When building an application, the origin of the dependencies impacts your security posture. In addition to that, the company’s due diligence includes regular scanning, risk assessment and vulnerability management of build artifacts and their sources. This task requires significant effort because of the overly complex dependency structure used in modern software. With the Assured Open-Source Software repository (abbrev.: AOSS), Google offers to take some of those tasks off the plate of CISO organizations or project teams. The contained libraries/modules

- Are scanned and fuzzed regularly,

- Bring their own SBOM and provenances, and

- Come with container analysis API results for metadata, risk assessment and vulnerability alerting via Pub/Sub or email.

The repository can be used during builds as the repository for open-source dependencies. Currently, over 1000 packages for Java and Python are supported. Google is still actively extending the coverage to support further packages.

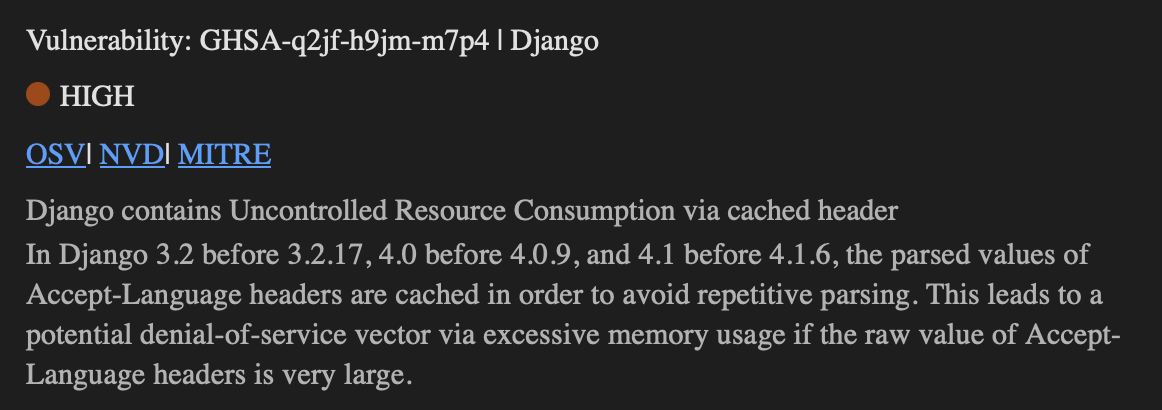

The assured packages are built in Google’s own secured pipelines within Google Cloud Build and are regularly scanned, analyzed and fuzz tested for vulnerabilities. Google sends a weekly Assured OSS alert summary report via email with all detected vulnerabilities in the assured images. An example excerpt of the report looks as follows:

Furthermore, detected vulnerabilities will be reported to the Open-Source Vulnerability Database to encourage maintainers to fix those vulnerabilities in future versions. Additionally, information on existing vulnerabilities in the respective container images is provided together with them in the artifact store. That way, consumers of the artifacts can find all relevant information in one place.

Not only vulnerability reports are provided alongside the artifacts. The assured artifacts come with signed provenances and signed SBOMs to further increase confidence in the supply chain. Google fulfills SLSA build level 3 with its offering.

Using the Assured Open-Source Software repository in projects is simple. The following extract of our example Dockerfile shows the two-step approach to install modules. The requirements are split into two files. One contains those modules that can be sourced from the Assured Open-Source Software repository, pinned by hash for reproducible builds and integrity protection. The other one lists all modules that need to be installed from external sources. Let us call the first requirements file requirements-google.txt and the latter one just requirements.txt. To be explicit here: the requirements.txt file also contains the modules listed in requirements-google.txt. The reason for that duplication will become obvious shortly.

Let us investigate the sample Dockerfile, which is used to build a Python container image using modules from the Assured Open-Source Software repository and the standard PyPI.org repository.

    FROM python:3.9-alpine3.16

    COPY [ "./requirements.txt", "./requirements-google.txt", "./" ]

    # Install OSS from Assured Open-Source Software Repository
    RUN --mount=type=secret,id=pipkey pip install \
        --require-hashes \
        --requirement=requirements-google.txt \
        --index-url=https://_json_key_base64:"$(cat /run/secrets/pipkey)"@us-python.pkg.dev/cloud-aoss/cloud-aoss-python/simple \
        --no-deps

    # Install dependencies from AOSS packages and packages that are not present in AOSS
    RUN python3 -m pip install -r ./requirements.txt

The first RUN command installs modules from Google’s Assured Open-Source Software repository. It uses pip, Python’s package manager, and authenticates against the Google APIs with a secret named pipkey. Furthermore, the --no-deps flag is provided since the dependencies are not part of the AOSS repository. The second RUN command installs the dependencies that are required by the AOSS modules from the previous step but do not exist in the AOSS repository. Note the missing --no-deps flag here. Additionally, the remaining modules listed in requirements.txt but not in requirements-google.txt are installed.
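To illustrate what pinning by hash means for the entries in requirements-google.txt, here is a minimal Python sketch. It produces a requirement line in the `name==version --hash=sha256:<digest>` form that pip checks when --require-hashes is set, and it re-checks a downloaded archive against such a line. The function names and the package name are hypothetical, and the sketch is not how pip implements the check internally.

```python
import hashlib


def pinned_requirement(name: str, version: str, archive: bytes) -> str:
    """Build a requirements line that pins a package archive by its SHA-256 hash."""
    digest = hashlib.sha256(archive).hexdigest()
    return f"{name}=={version} --hash=sha256:{digest}"


def matches_pin(requirement_line: str, archive: bytes) -> bool:
    """Check whether an archive has the hash recorded in the requirement line."""
    expected = requirement_line.split("--hash=sha256:", 1)[1].strip()
    return hashlib.sha256(archive).hexdigest() == expected


# Hypothetical package name and archive content.
line = pinned_requirement("example-package", "1.0.0", b"archive content")
print(line)
print(matches_pin(line, b"archive content"))   # True
print(matches_pin(line, b"modified content"))  # False
```

In practice, the hash of a downloaded archive can also be obtained with the `pip hash` command.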

We were able to confirm that the most frequently used modules for Python are included within the AOSS repository. Java packages are out of scope for this article.

The Assured Open-Source Software repository is simple to use. Given that most companies struggle with trusting open-source software from external repositories, maintaining a security assessment process for open-source software and keeping the dependencies up to date, Google’s repository is a convenient choice. The only downside we discovered is that many dependencies of the packages provided are not assured yet. Hence, they are still installed via public repositories, which do not ensure as high a security level as the Assured Open-Source Software repository does. Thus, the whole supply chain does not fulfill the highest SLSA build level. However, Google puts a lot of focus on extending the repository, which is why we can hope that soon most of the dependencies will be assured as well.

Overall, using a cloud native OSS repository with integrated vulnerability monitoring and alerting will reduce the overhead of migrating to the cloud and supports teams in accomplishing security compliance fast.

Development Environment

Before coding can commence, a compliant and secured development environment needs to be set up and provided to the developers. Regulators set ambitious standards that need to be met and project requirements dictate high scalability and stability. Corporate processes require fine grained authorization and an audit trail. Especially, when working with cloud environments, establishing a connection to the necessary endpoints can take a long time.

To cope with those challenges, Google provides Cloud Workstations to enable customers to provision a cloud-based development environment. It is accessible via the browser or a remote connection via local IDEs, e.g. through the JetBrains Gateway or the VS Code Remote Explorer. The workstations are based on container images that can be customized further to adhere to specific policies/requirements and to reduce the onboarding overhead by preinstalling needed software, tools, and plugins or, e.g., removing sudo capabilities. The images run on virtual machines on specific clusters that can be created with the click of a button. The configuration options are the same as in Google Compute Engine. Hence, machine, performance, networking and security settings can be adapted to the company’s needs such that developers get access to compliant, secure, and scalable environments. The integrity monitoring protects against alteration of critical components.

Despite the vast number of possibilities to secure and prepare the workstations, all users must do to start writing code is spin up a workstation based on the configuration they have been assigned. If prepared thoroughly, all necessary software and plugins including their configuration are already preinstalled within the image. Users can access the IDE within the browser with the Code OSS editor or by connecting remotely with their favorite IDE running locally. The option to connect remotely may bring further compliance and networking challenges with it, which in some cases can be weighed against developers being more productive in a local setup whilst leveraging the power of the cloud.

During our evaluation, we tested Code OSS from within the browser as well as a remote connection from PyCharm. Both approaches worked well, and no annoying lag was noticeable even with the smallest cloud workstation machine possible. Due to Code OSS’s close relation to VS Code, developers can feel at home right away, even though the application does not run on their machine. If more powerful machines are needed, 32 vCPUs with 128 GB RAM and one terabyte of persistent storage can be configured.

Considering the benefits of a fast, easy and compliant provisioning of a scalable development environment for cloud-based projects with innovative security mechanisms and simple configuration, Google’s Cloud Workstations work well from a configuration and usage point of view. The product can be considered a valid option if a company is either struggling to enable developers within their own environment or if a new cloud native approach is complementing the existing corporate structures.

Source Code Management

Developed code needs to be stored securely for collaboration and staging purposes. Access should be limited to project members and mechanisms for promoting changes need to be guarded by processes that fit the company’s risk appetite.

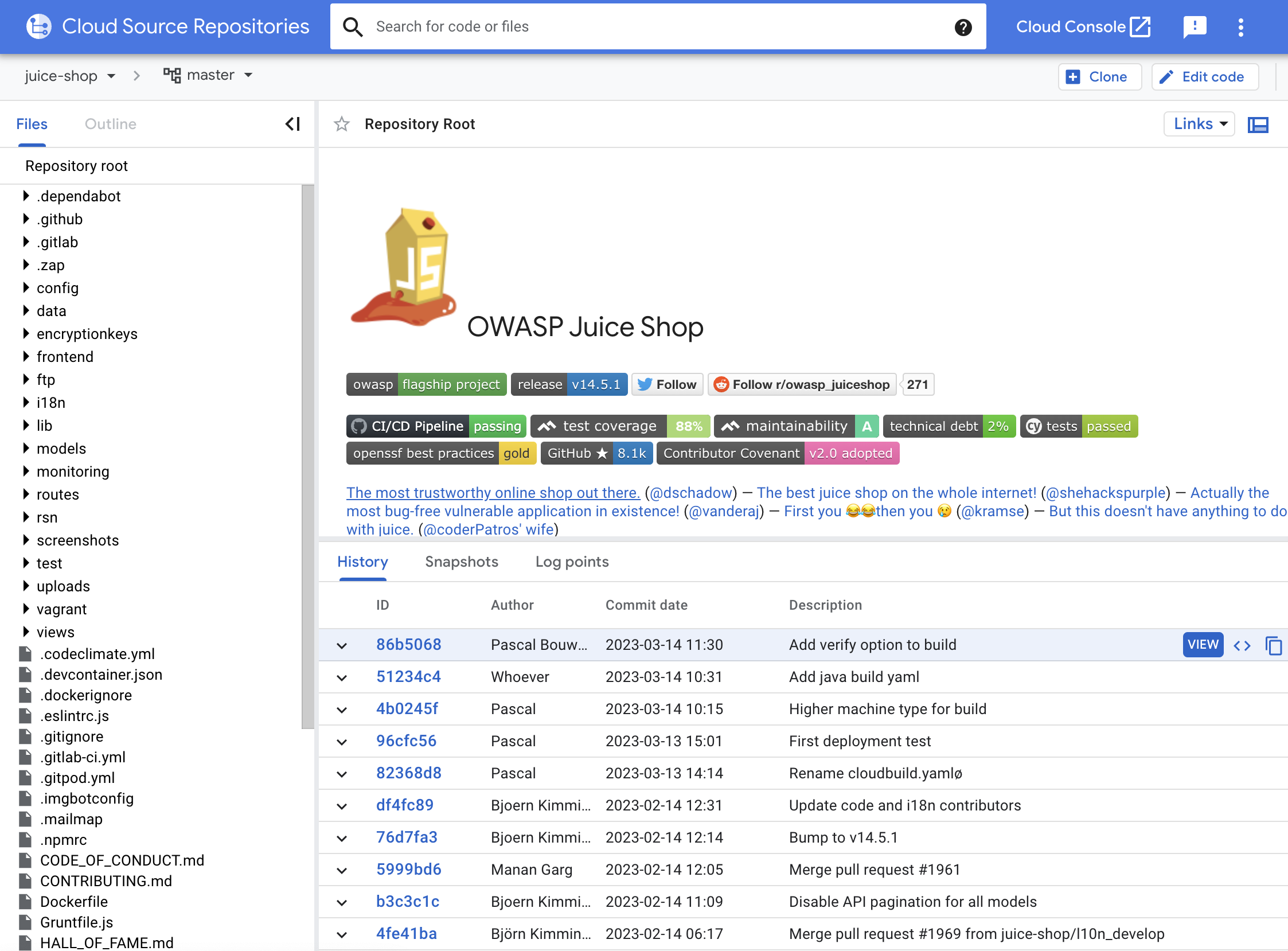

Google’s Cloud Source Repositories offers the expected rudimentary functionalities of a pure code versioning tool. Developers can make use of different repositories, branches and tags. Authorization controls are extremely broad as you can choose different sets of permissions for users depending on their role. Source code can be edited online in the Cloud Shell Editor which is based on VS Code and is shipped with the git extension. Hence, one can manage branches, tags and merges within the UI.

Nevertheless, the basic collaboration functionalities are lacking. Pull requests are not supported and there is no possibility to configure branch protection rules, ensuring that merging into certain branches can only be done via a reviewed and approved pull request. Feature requests to realize these functionalities have already been open for several years with no movement.

Overall, Google’s Cloud Source Repositories cannot be considered as a suitable code repository for most corporate applications since the features it lacks are commonly used throughout the industry. Smaller projects may be able to start using Google’s solution, but will experience the limitations quickly. Thankfully, the Software Delivery Shield suite is flexible in terms of the code repository. Hence, starting by hosting the source code in one of the de facto standard solutions that offer the required collaboration features like GitHub or GitLab is recommended. If specific features require the use of Google’s Cloud Source Repositories, a synchronization mechanism, i.e. mirroring, can be set up between established code repositories and Google’s solution.

Build Pipeline

The build step plays an essential role within the realm of software supply chain security. The developed code is joined with a variety of open-source or proprietary libraries from different origins. Each of them potentially brings vulnerabilities into the application. Hence, it is of utmost importance to keep track of all dependencies, to ensure that they are up to date and to track their known vulnerabilities. Not only the versions and vulnerabilities are of interest. Consumers also want to be able to trust the build artifact. Tackling all those aspects can be incredibly challenging, especially when the software grows. At this point, the Software Delivery Shield comes into play. The software suite supports teams with several features which make securing the software supply chain easier.

The central software component in the solution of Google which is responsible for the build step is Google Cloud Build. It supports SLSA Build Level 3 builds for container images by generating authenticated and non-falsifiable build provenances for containerized applications (see Artifact Provenance for Container Images). The signed build provenance contains information about the build process and the used builder, in this case Cloud Build. This security feature does not require additional configuration. One just writes the usual YAML configuration file for the Cloud Build pipeline. The provenance creation and signing are done automatically by Cloud Build.

Given that, it is quite tempting to make use of Google Cloud Build. There are different machines available to run the pipelines on. However, only the smallest machine type is equipped with free quotas. Unfortunately, the build process is terribly slow on that machine. We did not investigate the cost of larger machine types, but they will speed up the build most likely.

The Software Delivery Shield does not enforce Cloud Build as a build tool. GitHub is supported as well. The only setup required is to include the GitHub action slsa-github-generator for provenance creation. An example can be found in Artifact Provenance for Container Images.

We personally recommend using GitHub in conjunction with the slsa-github-generator action, as we believe that the user interface and the user experience of GitHub, the current de facto standard, are better suited. Nonetheless, it needs to be highlighted that, in contrast to GitHub, no additional configuration for provenance creation is required in Cloud Build.

Artifact Management

After the build step, the resulting artifact needs to be stored, e.g., in an artifact store. When fetching images from an artifact store, consumers want to verify the authenticity and integrity of the artifact. This requires an attestation to be stored alongside the artifact. Furthermore, consumers want to be confident about using an artifact which contains as few vulnerabilities as possible. Hence, another requirement for the artifact store is to provide vulnerability scanning capabilities and vulnerability reports.

Google offers an artifact store which fulfills the above-mentioned requirements: the Artifact Registry. When the provenance gets generated during the build step, an attestation is created as well which confirms the existence of a provenance including a reference to the latter. The artifact and the attestation are pushed to Artifact Registry.

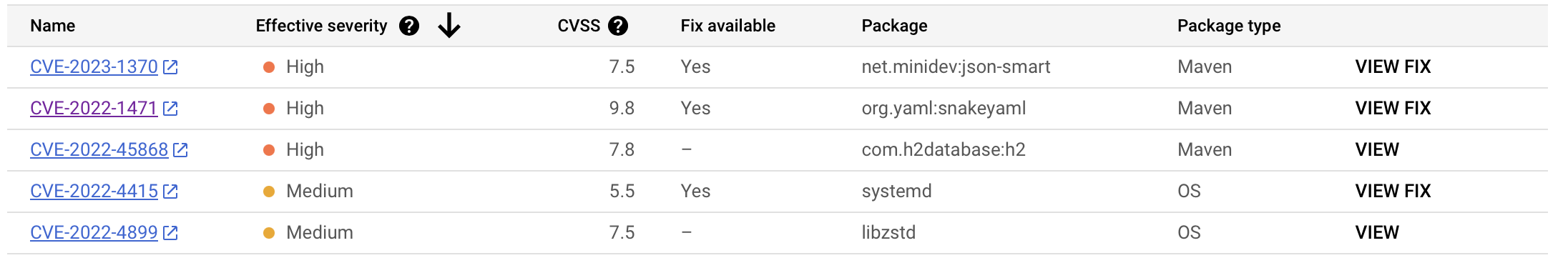

Artifacts in the Artifact Registry are scanned for vulnerabilities regularly. The detected vulnerabilities are listed directly in the Google Cloud Console.

To take advantage of Google’s container analysis feature, the Artifact Registry of Google must be used. To stay informed about the security stance of builds or deployed artifacts, Google offers container scanning for build and runtime. Currently the scanning is limited to

- the OS of the image,

- Java, and

- Go.

In addition to the results depicted in the screenshot above, the Container Analysis API can be queried manually or automatically to gather information on available updates, vulnerabilities or further details as attestations.

If organizations prefer to be notified proactively, there are specific Pub/Sub channels that can be subscribed to. Notifications will be sent when new vulnerabilities become evident or existing information is amended. This can potentially be used to outsource the container scanning completely, a topic many organizations struggle with. If more organizations migrate to such vulnerability scanning as a service, security information can be shared among the clients and a sophisticated, efficient early warning system can be established, supporting mitigations in case a catastrophe such as the Log4j incident happens again.

Although there are many sources for generating findings on vulnerabilities and flaws in images and code, one feature required by many organizations is still missing. Especially in the cloud, there is a relatively new and exceptionally pleasant view on vulnerabilities, resulting in a huge pile of findings that need to be managed. A holistic vulnerability management system that channels all sources and supports processes within the organization is needed to deal with the many findings. Those must be analyzed, categorized, re-assessed, assigned, mitigated and retested. This essential vulnerability finding lifecycle is currently not supported within Google’s Software Delivery Shield.

Layer categorization, which details in which layer the vulnerability is present (OS, dependency, own code) and thus supports the assessment, is not yet present within the toolchain either.

To conclude, the tools supplied to support companies in maintaining their deployments are a good foundation with steps in the right direction, but there are still vital functionalities missing.

Sadly, only Maven, Go and OS packages are currently supported. Another aspect to keep in mind is that regular scanning is only performed for artifacts that have been changed within the previous 30 days. Older artifacts are no longer scanned. Currently, the vulnerability management is rudimentary: one needs to actively look into Artifact Registry for the vulnerability list of each artifact.

Deployment and Binary Authorization

After having built and stored artifacts, the next step is to use them in the deployment step, which holds the next security control. A secure software supply chain requires artifacts to originate from trusted sources. This can be ensured by verifying the digitally signed attestation which has been pushed to the artifact store along with the build artifact itself. For example, it can be verified that a certain build pipeline had been used. All metadata which the build attestation contains can be filtered and verified.

The deployment tool Google offers is Google Cloud Deploy. It natively supports deployments to Google Kubernetes Engine and Cloud Run (serverless runtime). Thus, it is a viable choice when working in Google Cloud Platform. Generally, the pipeline YAML file contains steps for both Cloud Build and Cloud Deploy. However, when making use of attestation verification, the build and deploy steps must be separated into distinct files. This is due to the fact that Google Cloud Build pushes the attestation after the pipeline has finished. Afterwards, the deployment pipeline can start and verify the build attestation. Binary Authorization refers to the restriction on signed artifact characteristics manifested within an attestation. Binary Authorization policies can be configured such that, for example, only artifacts built by Google Cloud Build are allowed. If an artifact does not satisfy the policies, it cannot be deployed to the target. Google also offers a dry-run mode for Binary Authorization, where the proper functioning of the policies can be tested. Policy failures are documented in the logs, but they are not enforced during deployment.
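The decision logic behind such a policy, including the dry-run mode, can be sketched as follows. This is a conceptual illustration in Python and not the Binary Authorization API: the class names, the `signature_valid` flag (which stands in for a real cryptographic check) and the builder identifiers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Attestation:
    """Simplified attestation; signature_valid stands in for a real signature check."""
    image_digest: str
    builder: str
    signature_valid: bool


@dataclass(frozen=True)
class Policy:
    """Hypothetical policy: only images built by trusted builders may be deployed."""
    trusted_builders: frozenset
    dry_run: bool = False


def admit(policy: Policy, image_digest: str, attestations: list) -> tuple:
    """Return (allowed, log message) for a deployment request."""
    satisfied = any(
        a.image_digest == image_digest
        and a.signature_valid
        and a.builder in policy.trusted_builders
        for a in attestations
    )
    if satisfied:
        return True, "allowed: valid attestation from a trusted builder"
    if policy.dry_run:
        # In dry-run mode the violation is only logged, the deployment proceeds.
        return True, "dry-run: policy violation logged, deployment not blocked"
    return False, "denied: no valid attestation from a trusted builder"


attestations = [Attestation("sha256:aaa", "cloud-build", True)]
enforcing = Policy(frozenset({"cloud-build"}))
print(admit(enforcing, "sha256:aaa", attestations))
print(admit(enforcing, "sha256:bbb", attestations))
print(admit(Policy(frozenset({"cloud-build"}), dry_run=True), "sha256:bbb", attestations))
```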

As most Google products are connected well, it is easy to use Cloud Deploy for deployments to Kubernetes and Cloud Run. Hence, it is again a viable choice within the software supply chain. However, if one is used to GitHub pipelines, it is also fine to stick to them. Otherwise, the new syntax of Google’s pipeline files needs to be learnt.

Runtime

Once the application is deployed and potentially exposed to the public, application teams need to ensure that any vulnerability found within the dependencies or code of the deployment is fixed as soon as possible. To make sure accountable staff is up to date, the mechanisms described in Artifact Management can be used.

Artifact Provenance for Container Images

In this chapter, we will walk through the provenance creation and verification process. We use a sample application written in Python whose source code is hosted on GitHub. The application is built by a GitHub workflow. For the provenance creation we will use the open-source GitHub action slsa-github-generator for container images. For provenance verification we will use the open-source tool slsa-verifier which has been written in Go.

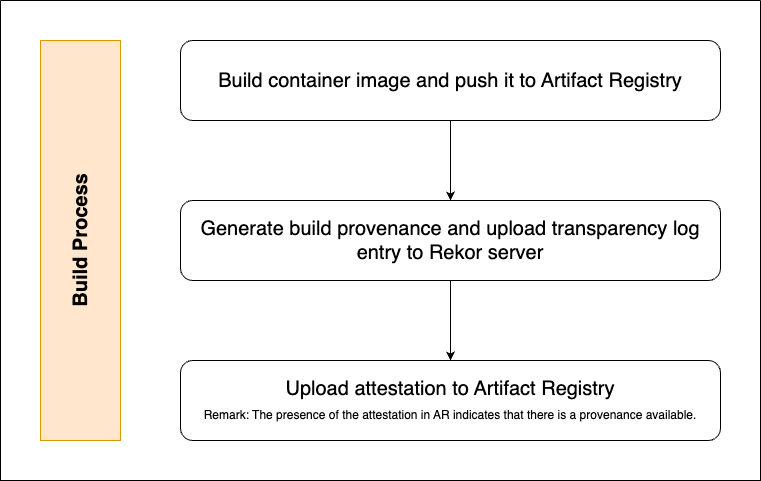

Build Process

Remark: We use the classic authentication method against Google Cloud with service account key files in our example. In a production setup, the new way using workload identity federation is recommended.

Remark: The example GitHub workflow which will be presented in the next sections consists of two jobs: One for building the Docker container image and pushing it to Artifact Registry, the other one for creating the build provenance. In this chapter, we will only show relevant code snippets. On GitHub, one can find the complete workflow code.

Build Container Image and Push It to Artifact Registry

In this example, we make use of GitHub’s provided runners based on ubuntu-latest.

Since our focus is on security, we skip the code of the setup steps in this workflow. The first two steps are: checkout code and log in to the Artifact Registry.

Afterwards, we make use of the docker/metadata-action action which provides us with tags and labels for our Docker image based on Git reference and GitHub events.

    - name: Extract Metadata (tags, labels) for Docker
      id: meta
      uses: docker/metadata-action@69f6fc9d46f2f8bf0d5491e4aabe0bb8c6a4678a # v4.0.1
      with:
        images: ${{ env.IMAGE_NAME }}

As you may have noticed, we do not reference GitHub actions by version tags. Instead, we use commit SHAs. This is also a security aspect as Git tags can be overridden whereas commit SHAs are unique and difficult to fake. The reliance on this security feature is crucial as the used actions are documented in the build provenance and one requirement of the SLSA specification is that the exact same artifact can be built by reapplying the steps written in the provenance.
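Whether a workflow follows this convention can be checked automatically. The following Python sketch is a simplified, regex-based check that assumes each `uses:` reference is written unquoted on a single line; the function name is hypothetical. It reports every reference that is not pinned to a full 40-character commit SHA. Note that the reusable slsa-github-generator workflow used for provenance creation has to be referenced by tag and would therefore be reported as well.

```python
import re

# Matches "uses: <reference>" entries, with or without a leading list dash.
USES = re.compile(r"^\s*(?:-\s*)?uses:\s*(?P<ref>\S+)", re.MULTILINE)
# A reference counts as pinned if it ends with "@" plus a full 40-character commit SHA.
FULL_SHA = re.compile(r"@[0-9a-f]{40}$")


def unpinned_actions(workflow_text: str) -> list[str]:
    """Return all action references that are not pinned to a full commit SHA."""
    references = [match.group("ref") for match in USES.finditer(workflow_text)]
    return [ref for ref in references if not FULL_SHA.search(ref)]


workflow = """
steps:
  - uses: actions/checkout@v3
  - name: Extract Metadata (tags, labels) for Docker
    uses: docker/metadata-action@69f6fc9d46f2f8bf0d5491e4aabe0bb8c6a4678a # v4.0.1
"""
print(unpinned_actions(workflow))  # ['actions/checkout@v3']
```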

Next, the container image can be built and pushed to Artifact Registry. Notice the reference on the previously used metadata action for creating the image tags and labels.

    - name: Build and Push Docker Image
      id: docker-build
      uses: docker/build-push-action@3b5e8027fcad23fda98b2e3ac259d8d67585f671 # v4.0.0
      with:
        push: true
        tags: ${{ steps.meta.outputs.tags }}
        labels: ${{ steps.meta.outputs.labels }}

Finally, we need to provide the image name as job output. Since job outputs cannot access environment variables, we need to append the name to GITHUB_OUTPUT. Additionally, we output the image’s digest which can be retrieved from the build step.

    - name: Image Name
      shell: bash
      id: image-name
      run: echo "image_name=$IMAGE_NAME" >> "$GITHUB_OUTPUT"

    outputs:
      digest: ${{ steps.docker-build.outputs.digest }}
      image: ${{ steps.image-name.outputs.image_name }}
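The mechanism behind step outputs is plain file appending: the runner provides the path of a file in the GITHUB_OUTPUT environment variable, and each line of the form name=value written to it becomes a step output. If a step runs a Python script instead of bash, the same effect can be achieved as in the following sketch. The helper name and the optional output_file parameter are our own additions for local testing, and the sketch only covers single-line values.

```python
import os
from typing import Optional


def set_output(name: str, value: str, output_file: Optional[str] = None) -> None:
    """Append a single-line step output in the name=value format."""
    if "\n" in value:
        raise ValueError("multi-line values need the delimiter syntax, not covered here")
    # On a GitHub runner, the path of the output file is provided via GITHUB_OUTPUT.
    path = output_file or os.environ["GITHUB_OUTPUT"]
    with open(path, "a", encoding="utf-8") as handle:
        handle.write(f"{name}={value}\n")
```

Inside a workflow step, calling `set_output("image_name", os.environ["IMAGE_NAME"])` would have the same effect as the echo command above.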

Having an artifact in our artifact store, we can move on to the provenance creation.

Provenance Creation

Using the open source slsa-github-generator workflow, creating a build provenance is an easy task. All we need to do is to provide the data prepared in the first job and feed it to the workflow.

    provenance:
      uses: slsa-framework/slsa-github-generator/.github/workflows/generator_container_slsa3.yml@v1.5.0
      with:
        image: ${{ needs.build-and-push.outputs.image }}
        digest: ${{ needs.build-and-push.outputs.digest }}
        registry-username: _json_key
        private-repository: true
      secrets:
        registry-password: ${{ secrets.GOOGLE_APPLICATION_CREDENTIALS }}

Contrary to the above-described need of using commit SHAs when referencing GitHub actions and workflows, here we are supposed to use the Git tags. Otherwise, you will run into an error.

Upload of Transparency Log Entry and Attestation

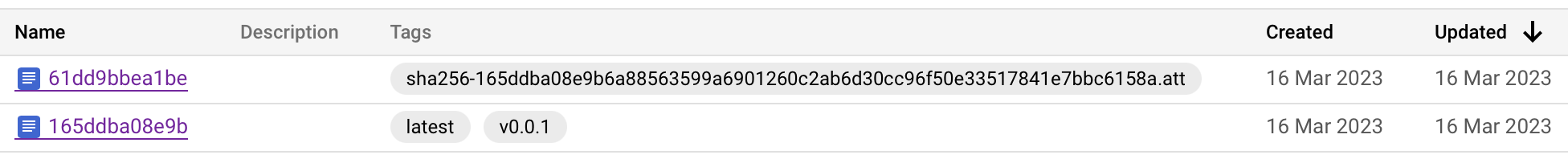

As part of the provenance creation job, a so-called transparency log entry is uploaded to the public Rekor server. The log entry can be fetched by consumers to verify the authenticity of the corresponding artifact. An attestation is uploaded to Artifact Registry next to the container image. The presence of the attestation indicates that a build provenance exists and can be requested to verify the container image.

The bottom entry is the container image. The top entry is the corresponding attestation. If you look carefully, you will notice that the attestation’s tag is of the form sha256-<ARTIFACT_HASH>.att. That way the artifacts and their respective attestations can be matched.
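The naming scheme can be expressed as a small helper. The following Python sketch derives the attestation tag from an image digest according to the sha256-<ARTIFACT_HASH>.att pattern described above; the function name is hypothetical.

```python
def attestation_tag(digest: str) -> str:
    """Derive the attestation tag (sha256-<hash>.att) from an image digest (sha256:<hash>)."""
    algorithm, _, hex_value = digest.partition(":")
    if algorithm != "sha256" or len(hex_value) != 64:
        raise ValueError(f"not a sha256 digest: {digest!r}")
    int(hex_value, 16)  # raises ValueError if the value is not hexadecimal
    return f"{algorithm}-{hex_value}.att"


digest = "sha256:165ddba08e9b6a88563599a6901260c2ab6d30cc96f50e33517841e7bbc6158a"
print(attestation_tag(digest))
```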

In the next section, we will show you how to perform the verification.

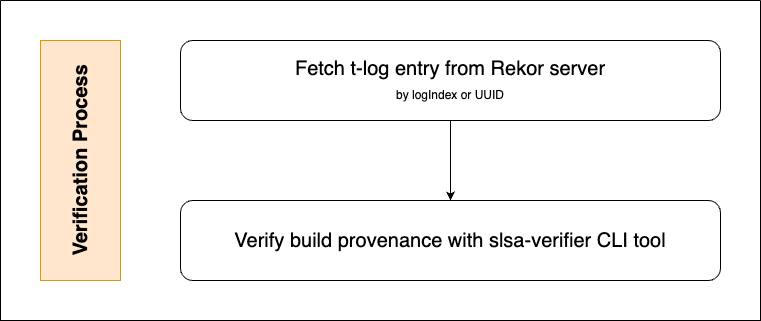

Verification Process

Get Transparency Log Entry

To fetch the transparency log entry from the Rekor server you can request the log entry by its UUID. First, you need to get the container image’s digest by running the following command.

    gcloud artifacts docker images describe \
      europe-west1-docker.pkg.dev/sw-delivery-shield/containers/python-application:v0.0.1 \
      --format 'value(image_summary.digest)'

Then, you can look for the log entry’s UUID by sending the following request against the Rekor server along with the image’s digest in the body.

    curl --location 'https://rekor.sigstore.dev/api/v1/index/retrieve' \
      --header 'Content-Type: application/json' \
      --data '{
        "hash": "sha256:165ddba08e9b6a88563599a6901260c2ab6d30cc96f50e33517841e7bbc6158a"
      }'

Finally, the transparency log entry can be requested by providing its UUID.

curl --location 'https://rekor.sigstore.dev/api/v1/log/entries/24296fb24b8ad77a140305e5ee0adfbff96f168ef08452960cf134caf41fa0bb9b6bd4898ddb4b2c'

Let us look at the example transparency log entry which the Rekor server sends with the response body.

{

"<t-log entry UUID>": {

"attestation": {

"data": "<base64 encoded, signed provenance>"

},

"body": "<hashes and public key>",

"integratedTime": 1678973743,

"logID": "c0d23d6ad406973f9559f3ba2d1ca01f84147d8ffc5b8445c224f98b9591801d",

"logIndex": 15563990,

"verification": {

"inclusionProof": { ... },

"signedEntryTimestamp": "MEYCIQDIpSHhrNHkYHilrFpW17gQtlRO/y6PwlvYHSjG08GXkAIhANqbg8hDaxSgIHRt44rXyX+mFDYQcP2CU+6ZxCA33IwB"

}

}

}
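The lookup steps above can be chained in a small script. The following is a sketch under a few assumptions: jq is installed, the index endpoint answers with a JSON array of UUIDs, and the first match is the entry we want. The function names, the REKOR_URL and IMAGE_REF variables, and the file name rekor-entry.json are our own and purely illustrative.

```shell
#!/usr/bin/env bash
# Sketch: chain the Rekor lookup steps. Requires curl and jq.
# REKOR_URL is a hypothetical override; the default is the public server.
REKOR_URL="${REKOR_URL:-https://rekor.sigstore.dev}"

# Build the JSON request body for the index lookup from an image digest.
rekor_query_body() {
  printf '{"hash": "%s"}' "$1"
}

# Ask the Rekor index for entries matching the digest and print the first
# UUID (assumes the response is a JSON array of UUID strings).
rekor_find_uuid() {
  curl --silent --fail --location "$REKOR_URL/api/v1/index/retrieve" \
    --header 'Content-Type: application/json' \
    --data "$(rekor_query_body "$1")" | jq -r '.[0]'
}

# Fetch the full transparency log entry for a UUID.
rekor_get_entry() {
  curl --silent --fail --location "$REKOR_URL/api/v1/log/entries/$1"
}

# Usage (needs network access and gcloud credentials):
#   digest="$(gcloud artifacts docker images describe "$IMAGE_REF" \
#     --format 'value(image_summary.digest)')"
#   uuid="$(rekor_find_uuid "$digest")"
#   rekor_get_entry "$uuid" > rekor-entry.json
```

Keeping the request body in its own function makes it easy to check what is sent to the server before running the network calls.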

The data we are interested in is the signed build provenance in <UUID>.attestation.data. We save the provenance in a file called rekor-provenance.b64. Next, we can verify it using the slsa-verifier.
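The verification command below reads a file called rekor-provenance.json, while the data taken from the log entry is base64 encoded. The following sketch shows one way to get from one to the other. It assumes that the JSON file is simply the base64-decoded content of attestation.data, and that the Rekor response was saved to a file first. The function names and the file name rekor-entry.json are hypothetical, and jq is required for the extraction step.

```shell
#!/usr/bin/env bash
# Sketch: extract and decode the provenance from a saved Rekor response.

# Print the base64 string stored under <UUID>.attestation.data (requires jq).
extract_attestation() {
  jq -r '.[].attestation.data' "$1"
}

# Decode a base64 file ($1) into a JSON file ($2).
decode_provenance() {
  base64 --decode < "$1" > "$2"
}

# Usage, assuming the response was saved as rekor-entry.json:
#   extract_attestation rekor-entry.json > rekor-provenance.b64
#   decode_provenance rekor-provenance.b64 rekor-provenance.json
```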

Verification of the Build Provenance

IMAGE="europe-west1-docker.pkg.dev/sw-delivery-shield/containers/python-application@sha256:165ddba08e9b6a88563599a6901260c2ab6d30cc96f50e33517841e7bbc6158a"

go run ./cli/slsa-verifier verify-image "$IMAGE" \

--provenance-path ../slsa/python-application/rekor-provenance.json \

--source-uri "github.com/Jash-ugan/sw-delivery-shield-intermission-mar2023" \

--builder-id="https://github.com/slsa-framework/slsa-github-generator/.github/workflows/generator_container_slsa3.yml@refs/tags/v1.5.0"

Verified build using builder https://github.com/slsa-framework/slsa-github-generator/.github/workflows/generator_container_slsa3.yml@refs/tags/v1.5.0 at commit 7be2d26cd65999e9cb8fd455f4bbcf12088a8ec9

PASSED: Verified SLSA provenance

Goal reached! We have built an artifact and successfully verified its origin.

In the following, we list some additional examples for completeness.

- Let us see what a failed verification looks like by verifying against an invalid tag.

go run ./cli/slsa-verifier verify-image "$IMAGE" \

--provenance-path ./rekor-provenance.json \

--source-uri "github.com/Jash-ugan/sw-delivery-shield-intermission-mar2023" \

--source-tag fd8598b \

--builder-id="https://github.com/slsa-framework/slsa-github-generator/.github/workflows/generator_container_slsa3.yml@refs/tags/v1.5.0" \

--print-provenance

FAILED: SLSA verification failed: expected tag 'refs/tags/fd8598b', got 'refs/tags/v0.0.1': tag used to generate the binary does not match provenance

exit status 1

- If we had used Google Cloud Build, we would verify against https://cloudbuild.googleapis.com/GoogleHostedWorker as builder ID.

- Verifying against tags was not supported for Google Cloud Build as of March 2023.
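Since a failed verification ends with a non-zero exit status, as seen in the example above, the check can serve as a gate in a deployment script. The following is a minimal sketch, assuming an installed slsa-verifier binary instead of go run. The SLSA_VERIFIER variable and the function name verify_provenance are our own and only serve as illustration. The flags are the ones used in the commands above.

```shell
#!/usr/bin/env bash
# Sketch: stop a deployment when the provenance check fails.
# SLSA_VERIFIER is a hypothetical variable pointing at the verifier binary.
SLSA_VERIFIER="${SLSA_VERIFIER:-slsa-verifier}"

# Arguments: image reference, provenance file, source URI, builder ID.
verify_provenance() {
  local image="$1" provenance="$2" source_uri="$3" builder_id="$4"
  if "$SLSA_VERIFIER" verify-image "$image" \
      --provenance-path "$provenance" \
      --source-uri "$source_uri" \
      --builder-id "$builder_id"; then
    echo "provenance verified for $image"
  else
    echo "provenance check failed for $image, aborting" >&2
    return 1
  fi
}

# Usage:
#   verify_provenance "$IMAGE" ./rekor-provenance.json \
#     "github.com/Jash-ugan/sw-delivery-shield-intermission-mar2023" \
#     "https://github.com/slsa-framework/slsa-github-generator/.github/workflows/generator_container_slsa3.yml@refs/tags/v1.5.0" \
#     || exit 1
```

Returning the status instead of exiting inside the function leaves it to the caller to decide whether a failed check should stop the whole pipeline.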

Important Remark on the SLSA Build Level

While the slsa-github-generator used here supports generating SLSA build level 3 provenance, the approach presented in this article does not fulfill level 3, because we generate the provenance after the build job has finished. To meet the level 3 requirements, the provenance needs to be generated as part of the build job, that is, within the docker/build-push-action action. This shows why reaching level 3 of the SLSA specification requires considerable effort.

Conclusion

In highly regulated industries, leveraging state-of-the-art technology within new or existing products is challenging. Teams have to secure new and already operationalized artifacts within the supply chain, provide a secure and compliant development and deployment environment, and meet the necessary connectivity requirements towards the chosen cloud provider. Together, these are a big hurdle to overcome before teams can start developing cloud applications. As we have seen in the last sections, this first obstacle when migrating to or starting in the cloud can be eased by leveraging Google’s Software Delivery Shield, which provides a fully managed solution from the compliant development environment via CI/CD to runtime.

Google’s Software Delivery Shield is a holistic suite that helps companies gain speed when starting new projects in a cloud environment. State-of-the-art security mechanisms and customizable environments support teams in their pursuit of compliance and security while maintaining an efficient development environment. Unfortunately, the stack does not provide holistic vulnerability management that tracks existing findings across the versions of a build artifact. Although the stack is still in preview, a high maturity can be reached by swapping out components such as the code repository for de facto industry standards like GitHub. With certain features to come, existing features to mature, and the flexibility of exchanging certain technologies, projects can leverage Software Delivery Shield to address a range of difficult requirements with ease.