Introduction

Microservice architectures have become a popular choice for agile software development with vertical feature teams. At the core of most of them is a mechanism for directing ingress traffic to different services. In this article, we explore implementing a traffic distribution mechanism for the microservices-based HTTP backend of an online banking application running on Google Cloud Run. Google Cloud has a proven track record in the financial industry, and with Cloud Run, its fully managed offering for running containers, operational expenses can be kept to a minimum.

This article kicks off by elaborating on Canary Deployments and their benefits. It then examines various traffic distribution options for an online banking application running in Google Cloud and explains in detail why Cloud Load Balancing was selected. Even though Cloud Load Balancing does not have out-of-the-box canary support, a functional approach relying on its supported features is presented and automated via Infrastructure as Code. The article concludes with insights into essential considerations for successfully implementing canary deployments.

What are Canary Deployments?

Canary Deployments are an approach to releasing new software gradually by first deploying it to a small, controlled user group before making it available to all users. This requires the ability to deploy multiple versions of the same microservice side by side and a way to direct incoming requests to these different versions. This enables the following use cases:

- Rollout of a production release to an internal tester group to conduct an end-to-end business verification test. After the test is passed, the release can be rolled out to all end users. This approach holds particular significance in the banking sector, where testing environments may occasionally lack connectivity to representative third-party systems and thus, in these special cases, an end-to-end test on production is desired before final sign-off is given.

The tester group is typically comprised of feature team representatives. The end-to-end test can be fully or partially automated. - Test a new service version on a small percentage of production users (e.g. just 1% or 10%) to reduce the business impact of possible failures which could not be detected on lower environments.

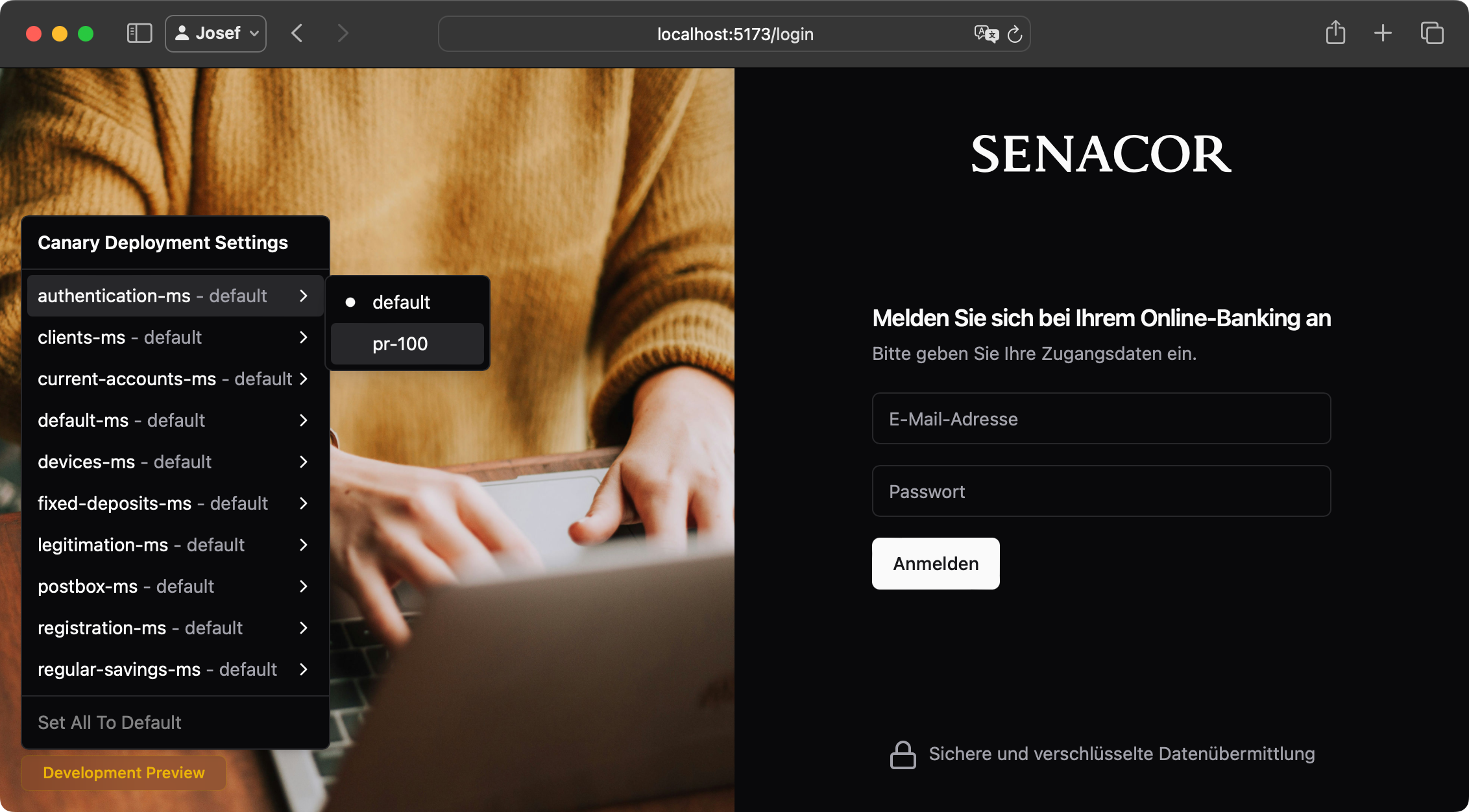

- During development, preview deployments for feature branches enable end-to-end testing during development without having to merge unfinished code early.

Load Balancing Options in the Google Cloud

This section gives an overview of five product options available in Google Cloud for distributing traffic to microservices:

- Service Mesh, recently renamed from Anthos Service Mesh and now part of GKE Enterprise, is Google’s managed variant of the open-source Istio service mesh. Like Istio, it offers advanced capabilities for routing traffic to microservices and is an excellent choice when running microservices on Kubernetes. Service Mesh is supported by VPC Service Controls (security perimeters that mitigate the risk of data exfiltration) and is thus suitable for applications with very strict security requirements. Its only downside is that it cannot be operated purely on serverless products like Cloud Run and Cloud Functions.

- Apigee is Google’s fully featured API management solution and is capable of distributing traffic to microservices. However, at the time of writing, it cannot be fully automated using an Infrastructure as Code approach: critical resources, such as the google_apigee_api_proxy resource in the Google Terraform Provider, are not yet available. Apigee’s unique strength is that it offers advanced features for securing and productizing APIs that the other solutions lack. On the flip side, this means it only really plays to its strengths when such features are a requirement. Apigee is supported by VPC Service Controls.

- API Gateway is a fully managed product that is well suited for distributing traffic to microservices running on serverless backends like Cloud Run and Cloud Functions. Its routing is configured via an OpenAPI document, which makes it elegant and lightweight to configure and easy to automate with Terraform. Unfortunately, API Gateway is not supported by VPC Service Controls, making it unsuitable for applications with strict security requirements, e.g. in the financial sector.

- Cloud Endpoints is the self-hosted variant of API Gateway and is also configured via an OpenAPI document. Traffic distribution is handled by a self-hosted proxy that can be operated on Cloud Run, GKE, Compute Engine and other platforms. Unfortunately, Cloud Endpoints is not supported by VPC Service Controls either.

- Finally, a global external Application Load Balancer, with the URL map as its core piece, supports distributing traffic to many backends and offers customizable routing logic. Automation via Infrastructure as Code is supported and works very well, although it involves more resources than API Gateway and has a steeper learning curve. It is also supported by VPC Service Controls. Notably, even when other solutions like Apigee or API Gateway are deployed, a Google Cloud load balancer is often placed in front of them to secure the application with Cloud Armor security policies or to serve it under a custom domain name.

To dive deeper into how Apigee, API Gateway and Cloud Endpoints compare, refer to this article on the Google Cloud blog.

In this article, we work with a microservice-based online banking application running on Cloud Run. The five options explained above narrow down the product choice: as VPC Service Controls are usually considered a must-have for financial applications, API Gateway and Cloud Endpoints are dismissed due to their lack of support. Apigee is dismissed because its deployment and configuration are difficult to automate, and Service Mesh is dismissed because the target application is not deployed to Kubernetes. Therefore, Google Cloud Load Balancing emerges as the most suitable routing solution for this use case.
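The elimination above can be expressed as a simple filter. The sketch below is only an illustration: the three boolean attributes per product encode the article's own assessment at the time of writing. Where the text does not comment on a criterion for a product (IaC automation for Service Mesh and Cloud Endpoints), the sketch assumes it is met, since that product is ruled out for another reason anyway.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    vpc_sc_supported: bool  # supported by VPC Service Controls
    fully_automatable: bool  # deployable and configurable fully via IaC
    runs_without_kubernetes: bool  # usable with Cloud Run only


# Attributes as summarized in the comparison above (at the time of writing).
OPTIONS = [
    Option("Service Mesh", True, True, False),
    Option("Apigee", True, False, True),
    Option("API Gateway", False, True, True),
    Option("Cloud Endpoints", False, True, True),
    Option("Cloud Load Balancing", True, True, True),
]


def eligible(options):
    """Return the names of all options that meet every requirement."""
    return [
        o.name
        for o in options
        if o.vpc_sc_supported and o.fully_automatable and o.runs_without_kubernetes
    ]


print(eligible(OPTIONS))  # ['Cloud Load Balancing']
```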

Distributing traffic to microservices with Google Cloud Load Balancing

Having decided to use a Google Cloud load balancer for distributing HTTP traffic to the microservices, we construct a suitable architecture in this section. While Google Cloud load balancers do not come with native canary support, a functional approach can be built by leveraging their ability to match HTTP headers.

The following picture shows the high-level architecture:

- Each microservice variant is deployed as a Cloud Run service.

- HTTP requests from the frontend are routed according to path prefixes. For example, /first matches requests to URL paths starting with /first, i.e. longer paths like /first/some-suffix also get matched. If multiple prefixes match, the longer, more specific prefix takes precedence.

Implementation note: This can be implemented using the URL map’s pathMatchers[].routeRules[].matchRules[].prefixMatch field and assigning higher priority to route rules for longer, more specific paths. A lower number means higher priority. More details here.

- Finally, the value of a special canary HTTP header, unique for each service, is compared. If the header is set to a canary’s value, the request is routed to the matching microservice variant; if not, the request is routed to the default, non-canary microservice for this path. For this to work, the canary’s route rule needs a higher priority than the default route rule for the same path prefix.

Implementation note: This can be implemented using the URL map’s pathMatchers[].routeRules[].matchRules[].headerMatches[].exactMatch field. More details here.
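To make these matching semantics concrete, here is a small Python model of how route rules are evaluated: rules are checked in ascending priority order, and the first rule whose prefix (and, for canaries, header value) matches wins. If nothing matches, the path matcher's default service is used. This is a simplified sketch assuming a single path matcher and exact header matches. It is not the load balancer's actual implementation, and the backend names and the x-canary-transfers header are hypothetical.

```python
from dataclasses import dataclass
from typing import Mapping, Optional, Sequence, Tuple


@dataclass(frozen=True)
class RouteRule:
    priority: int  # lower number = evaluated first
    prefix: str  # plain string prefix, like prefixMatch
    backend: str
    header: Optional[Tuple[str, str]] = None  # (name, exact value) for canaries


def route(rules: Sequence[RouteRule], path: str,
          headers: Mapping[str, str], default: str) -> str:
    """Return the backend of the first matching rule in priority order."""
    # HTTP header names are case-insensitive, so normalize them.
    normalized = {k.lower(): v for k, v in headers.items()}
    for rule in sorted(rules, key=lambda r: r.priority):
        if not path.startswith(rule.prefix):
            continue
        if rule.header is not None:
            name, value = rule.header
            if normalized.get(name.lower()) != value:
                continue
        return rule.backend
    return default


# Hypothetical rules: longer prefixes and canary rules get lower priority numbers.
RULES = [
    RouteRule(1, "/accounts/transfers", "transfers-canary",
              ("x-canary-transfers", "feature-x")),
    RouteRule(2, "/accounts/transfers", "transfers"),
    RouteRule(3, "/accounts", "accounts"),
]

print(route(RULES, "/accounts/transfers/42",
            {"X-Canary-Transfers": "feature-x"}, "default-backend"))  # transfers-canary
print(route(RULES, "/accounts/transfers/42", {}, "default-backend"))  # transfers
print(route(RULES, "/accounts/balance", {}, "default-backend"))  # accounts
print(route(RULES, "/other", {}, "default-backend"))  # default-backend
```

Note that the canary rule only wins because it is evaluated before the default rule for the same prefix; swapping priorities 1 and 2 would make the canary unreachable.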

The previous high-level view depicted the load balancer in a simplified manner. The following picture, taken from the Load Balancing documentation, shows a breakdown of the Google Cloud resources that form a load balancer:

The central piece is the URL map, which is responsible for the routing logic. The URL map and everything in front of it (the forwarding rule and the target proxy) exist only once. In contrast, the backend service, serverless network endpoint group and Cloud Run service exist once per microservice variant. Put together, these resources form a load balancer capable of distributing incoming traffic to the appropriate microservices and, when certain HTTP headers are set, to their canary variants.
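The split between shared and per-variant resources can be sketched as a small plan generator. The Terraform resource types below are the ones commonly used for a global external Application Load Balancer in front of Cloud Run (a serverless network endpoint group is a google_compute_region_network_endpoint_group with network_endpoint_type set to SERVERLESS). The sketch assumes an HTTPS target proxy, omits supporting resources such as certificates and the global IP address, and uses hypothetical variant names.

```python
from typing import List, Tuple

# Created once for the whole load balancer.
SHARED = [
    "google_compute_global_forwarding_rule",
    "google_compute_target_https_proxy",
    "google_compute_url_map",
]

# Created once per microservice variant (default or canary).
PER_VARIANT = [
    "google_compute_backend_service",
    "google_compute_region_network_endpoint_group",  # serverless NEG
    "google_cloud_run_v2_service",
]


def plan_resources(variants: List[str]) -> List[Tuple[str, str]]:
    """Return (resource type, owner) pairs; owner is 'shared' or a variant."""
    plan = [(rtype, "shared") for rtype in SHARED]
    for variant in variants:
        plan.extend((rtype, variant) for rtype in PER_VARIANT)
    return plan


for rtype, owner in plan_resources(["transfers", "transfers-feature-x"]):
    print(f"{owner:22} {rtype}")
```

Adding a canary therefore adds three resources and one route rule in the URL map, while the front end of the load balancer stays untouched.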

Deployment via Infrastructure as Code

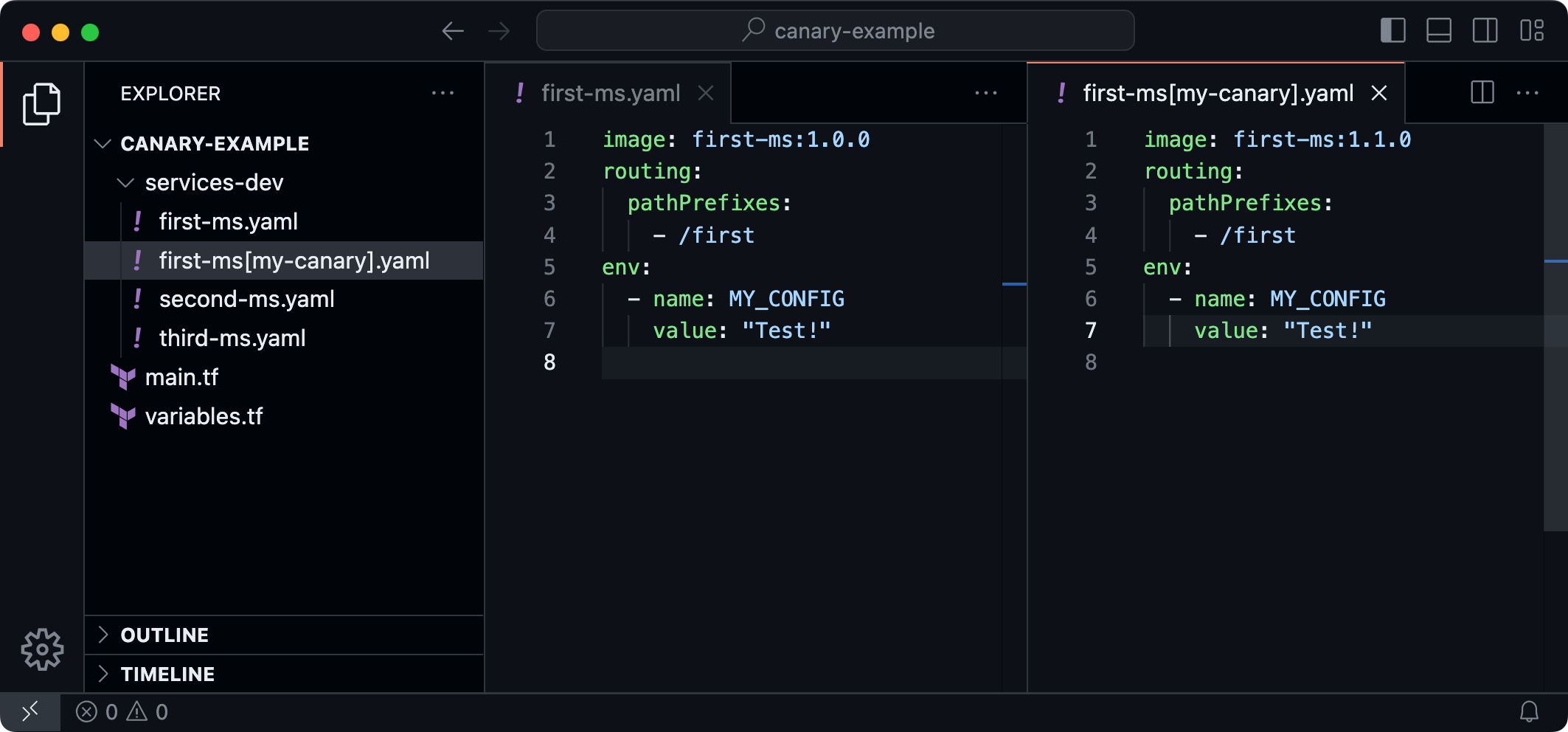

The following picture shows the layout of the repository for deploying the infrastructure:

- In a central repository, every microservice variant gets its own YAML file containing its configuration. This includes its container image, path prefixes for routing and environment variable values.

- Canary variants are marked by square brackets in the file name that contain the header value used to match the canary. This naming convention makes canary deployments easy to spot by their file names.

- Finally, custom Terraform code deploys the load balancer and the Cloud Run services. The code uses Terraform’s fileset() and file() functions to load all YAML files and then creates the Google Cloud resources using the Google Terraform Provider. The key pieces are the google_cloud_run_v2_service resources and the google_compute_url_map resource.

The code is available on GitHub.
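The repository implements this logic in Terraform. Purely as an illustration of the idea, and not as a port of that code, the Python sketch below turns per-variant configurations into prioritized route rules. It assumes a hypothetical naming convention of <service>.yaml and <service>[<header value>].yaml, a hypothetical path_prefixes key, and a hypothetical x-canary-<service> header name, and it takes already-parsed YAML content as dictionaries to stay dependency-free.

```python
import re
from typing import Dict, List, Optional, Tuple

# Hypothetical convention: "<service>.yaml" or "<service>[<header value>].yaml".
FILENAME = re.compile(r"^(?P<service>[a-z0-9-]+)(?:\[(?P<canary>[^\]]+)\])?\.yaml$")


def parse_filename(filename: str) -> Tuple[str, Optional[str]]:
    """Split a file name into service name and optional canary header value."""
    match = FILENAME.match(filename)
    if match is None:
        raise ValueError(f"unexpected file name: {filename}")
    return match["service"], match["canary"]


def build_route_rules(configs: Dict[str, dict]) -> List[dict]:
    """Turn per-variant configs into route rules with unique priorities."""
    entries = []
    for filename, config in configs.items():
        service, canary = parse_filename(filename)
        backend = f"{service}-{canary}" if canary else service
        for prefix in config["path_prefixes"]:
            entries.append((prefix, service, canary, backend))
    # Longer prefixes first; for the same prefix, canary rules before the default.
    entries.sort(key=lambda e: (-len(e[0]), e[2] is None, e[0], e[3]))
    rules = []
    for priority, (prefix, service, canary, backend) in enumerate(entries, start=1):
        rule = {"priority": priority, "prefix_match": prefix, "backend": backend}
        if canary:
            rule["header_exact_match"] = (f"x-canary-{service}", canary)
        rules.append(rule)
    return rules


configs = {
    "accounts.yaml": {"path_prefixes": ["/accounts"]},
    "transfers.yaml": {"path_prefixes": ["/accounts/transfers"]},
    "transfers[feature-x].yaml": {"path_prefixes": ["/accounts/transfers"]},
}
for rule in build_route_rules(configs):
    print(rule)
```

Deriving priorities from prefix length means no one has to assign priority numbers by hand when a preview file is added or removed.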

This setup was chosen for several reasons. In Google Cloud Load Balancing, all routing logic has to live in one place, the URL map, so keeping all microservice configurations in one Git repository makes all the necessary information easy to access. A single repository also makes it simple to undeploy or redeploy all services, and updating or adding a service only requires changing one file. Some tasks can even be automated: when a pull request is opened in a microservice repository, a commit can automatically be made in the deployment repository to deploy a preview build of the service. Conversely, when the pull request is closed, the preview deployment is removed again. Keeping a separate file for each service also helps avoid merge conflicts. The setup would work with other routing solutions such as GKE Enterprise Service Mesh as well, although in that case a Kubernetes-specific GitOps solution such as GKE Enterprise Config Sync, Argo CD or Flux CD might be better suited for deployment.

Outlook

In this section we’ll cover topics that were not the focus of this blog post but are important considerations.

Percentage-based canaries, feature flags

The first topic is canaries targeting a percentage of end users, e.g. 10%. Depending on the exact requirements, there are multiple options for implementing this. Typically, one would want to choose at random which users get the canary, but once a given user is served a canary, all of their subsequent requests should also be routed to the canary. This could be achieved by specifying weightedBackendServices in the load balancer’s URL map in combination with sessionAffinity on the backend service. Another approach is to handle the segmentation of users via a feature flagging solution integrated into the frontend, which has the advantage of allowing more control. Various feature flag providers can be found on the CNCF landscape.
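Feature flagging solutions commonly implement sticky percentage rollouts by hashing a stable user identifier into a bucket. The sketch below shows that general technique only; it is not tied to any particular provider, and the user ID format and salt value are assumptions.

```python
import hashlib


def in_canary(user_id: str, percentage: float, salt: str = "canary-rollout-1") -> bool:
    """Deterministically decide whether a user belongs to the canary group."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    # Map the first 8 bytes to a bucket in [0, 10000), i.e. 0.01% granularity.
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return bucket < percentage * 100


users = [f"user-{i}" for i in range(100_000)]
share = sum(in_canary(u, 10) for u in users) / len(users)
print(f"{share:.1%} of users in canary")  # roughly 10%
```

Because a user's bucket never changes, the same user always gets the same answer, and raising the percentage from 1% to 10% keeps everyone already in the canary group inside it. Changing the salt reshuffles the assignment.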

Security posture

A further aspect to consider is security: the approach presented in this blog post assumes that any canaries deployed to production are production-ready. Keep in mind that a malicious actor can deliberately set or modify the canary HTTP headers they send. This does not weaken the security posture as long as all canaries are required to follow the same security standards as any other microservice in production. Nevertheless, it is possible to lock this down further by only serving canaries to internal users coming from a certain IP range.
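The IP range restriction can be modeled as a filter that ignores canary headers sent by external clients. This is a sketch of the policy only, assuming that all canary headers share a hypothetical x-canary- prefix and using example IP ranges; how such a rule would be enforced on the load balancer is not covered here.

```python
import ipaddress
from typing import Dict, Iterable, Mapping


def effective_headers(headers: Mapping[str, str], client_ip: str,
                      internal_ranges: Iterable[str]) -> Dict[str, str]:
    """Drop canary headers unless the client comes from an internal range."""
    address = ipaddress.ip_address(client_ip)
    # Membership is False when IP versions differ (IPv4 vs. IPv6).
    internal = any(address in ipaddress.ip_network(cidr) for cidr in internal_ranges)
    if internal:
        return dict(headers)
    return {k: v for k, v in headers.items()
            if not k.lower().startswith("x-canary-")}


headers = {"X-Canary-Transfers": "feature-x", "Accept": "application/json"}
print(effective_headers(headers, "10.1.2.3", ["10.0.0.0/8"]))  # canary header kept
print(effective_headers(headers, "203.0.113.7", ["10.0.0.0/8"]))  # canary header dropped
```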

Database Migrations

For any deployment strategy that allows multiple service versions to run at the same time, database schema compatibility is an important concern. Luckily, important use cases for canaries, like testing a new microservice version after a large refactoring or after updating dependencies, do not require a database migration. For the remaining use cases, there are many nuances to the topic that are beyond the scope of this blog post.

Relation to A/B Testing

Canary deployments are closely related to the concept of A/B testing. Both involve releasing new features or updates to a subset of users before rolling them out to the entire audience. Canary deployments are typically used to achieve technical goals like identifying bugs or performance regressions. In contrast, A/B testing is a tool to measure the business performance of new features, often over a longer period of time. To learn more about this topic, please check out the Canary Release and Feature Toggles blog posts on the Martin Fowler Blog.

Conclusion

To wrap up, this blog post explored various traffic distribution options for microservices in Google Cloud. For our use case of an online banking application running on Cloud Run, Cloud Load Balancing was chosen as the most suitable traffic distribution solution. Even though Google Cloud load balancers do not have out-of-the-box canary support, a functional approach based on HTTP header matching is possible. Canary deployments are a valuable tool for safely testing and rolling out software updates: by exposing new versions to a limited audience first, they minimize the impact of unforeseen issues on end users and result in a better overall user experience during updates.

Special thanks go to my fellow colleagues for their feedback on this article: Simon Holzmann, Max Rigling, Bastian Hafer and Alexander Münch.